Interpret the data

Monitor chatbot performance

Identify whether the chatbot that you designed performs according to your assumptions. Check the following:

- Is there a large discrepancy between total and engaged sessions?

- Do end users return to conversations?

- Are end users satisfied? Use CSAT to get feedback from your end users.

- Do many users request agent help or do agents need to take over conversations?

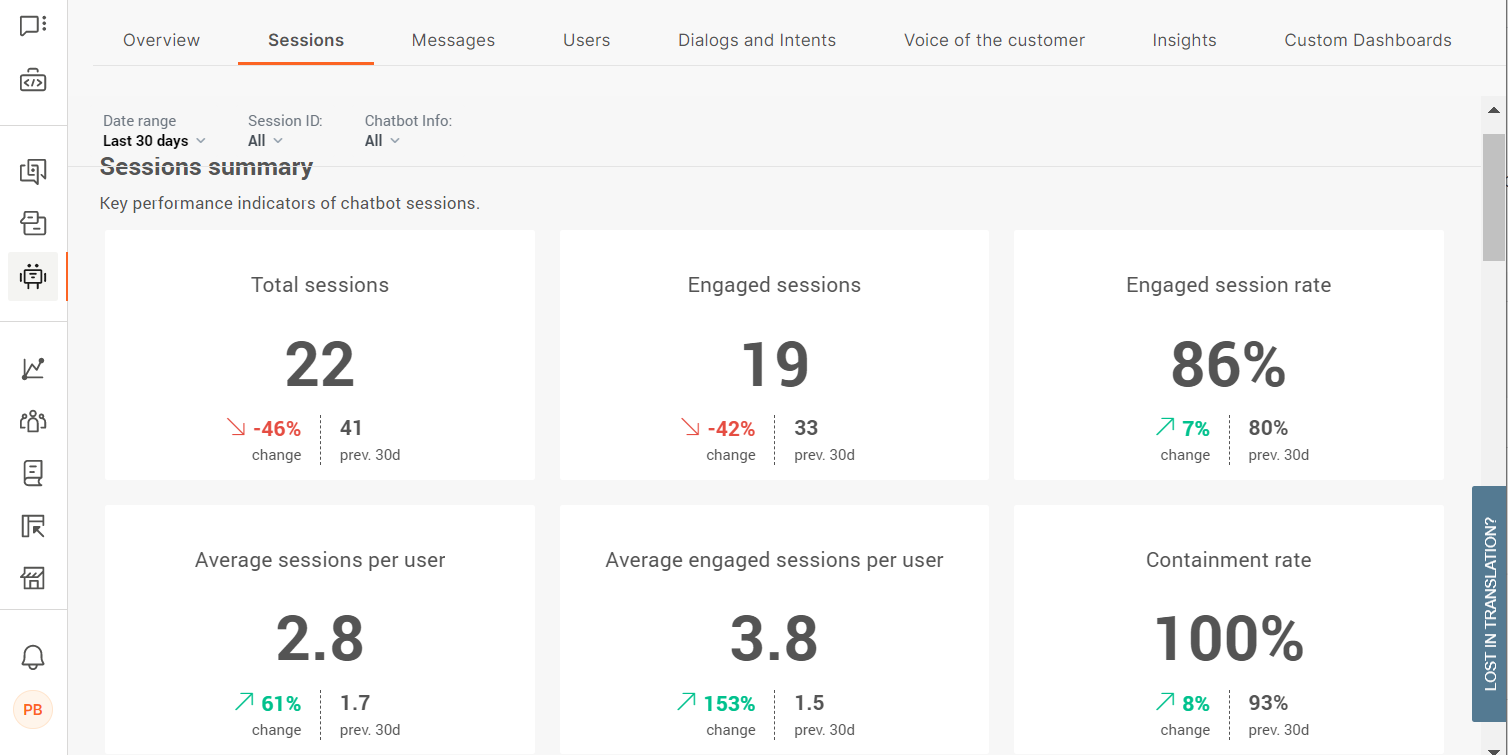

Identify if there is a discrepancy between total and engaged sessions

In the Sessions tab > Sessions summary section, you can see the total number of sessions and the number of engaged sessions.

An engaged session indicates that the end user continued the conversation with the chatbot after they sent the initial message. So, it is preferable for sessions to be engaged.
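To judge whether the gap between total and engaged sessions is large, it can help to express it as a share. The following is a minimal sketch, assuming you copy the two numbers from the Sessions summary section by hand. The function name and the sample figures are hypothetical and are not part of the product.

```python
def engagement_rate(total_sessions: int, engaged_sessions: int) -> float:
    """Return the share of sessions that were engaged, from 0.0 to 1.0."""
    if total_sessions < 0 or engaged_sessions < 0:
        raise ValueError("session counts must be non-negative")
    if engaged_sessions > total_sessions:
        raise ValueError("engaged sessions cannot exceed total sessions")
    if total_sessions == 0:
        # No sessions yet, so there is nothing to measure.
        return 0.0
    return engaged_sessions / total_sessions


# Hypothetical figures read from the Sessions summary section.
rate = engagement_rate(total_sessions=1200, engaged_sessions=780)
print(f"Engaged: {rate:.0%}, not engaged: {1 - rate:.0%}")
```

What counts as a large share of non-engaged sessions depends on your chatbot, so compare the value over time rather than against a fixed threshold.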

If you notice that a large number of sessions are not engaged, it could mean one or more of the following:

- Your end users lose interest in talking with the chatbot after they receive its reply. Identify how to make the initial message from the chatbot more engaging. Consider whether end users know how to respond to the chatbot and offer options or examples of messages that they can send to the chatbot. Explain what the chatbot can do for them.

- Instead of an end user, another chatbot is sending messages to your chatbot. To check whether this might be the case, go to the Users tab and click the suspected User ID. Then, click the Session ID to see all the messages in the conversation.

- The chatbot does not know how to reply to the end users' messages. The reason could be that the chatbot cannot identify the intent of the initial message and reply to it correctly. Check whether the end users' initial messages are part of your chatbot's training. Add these messages as keywords (rule-based chatbots) or training phrases (AI chatbots).

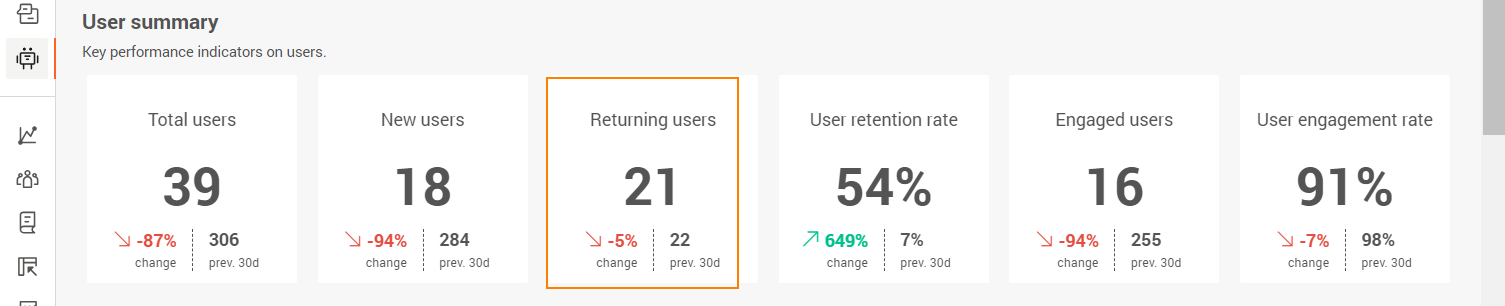

Identify how many end users return

Depending on the type of chatbot, you might want your end users to return and have new conversations with your chatbot.

Example: If you have a retail chatbot, a large number of returning end users is desirable because it indicates that the end users liked the experience. But if you have a chatbot that provides first-line support, a large number of returning end users can indicate that their queries were not resolved the first time they contacted your chatbot.

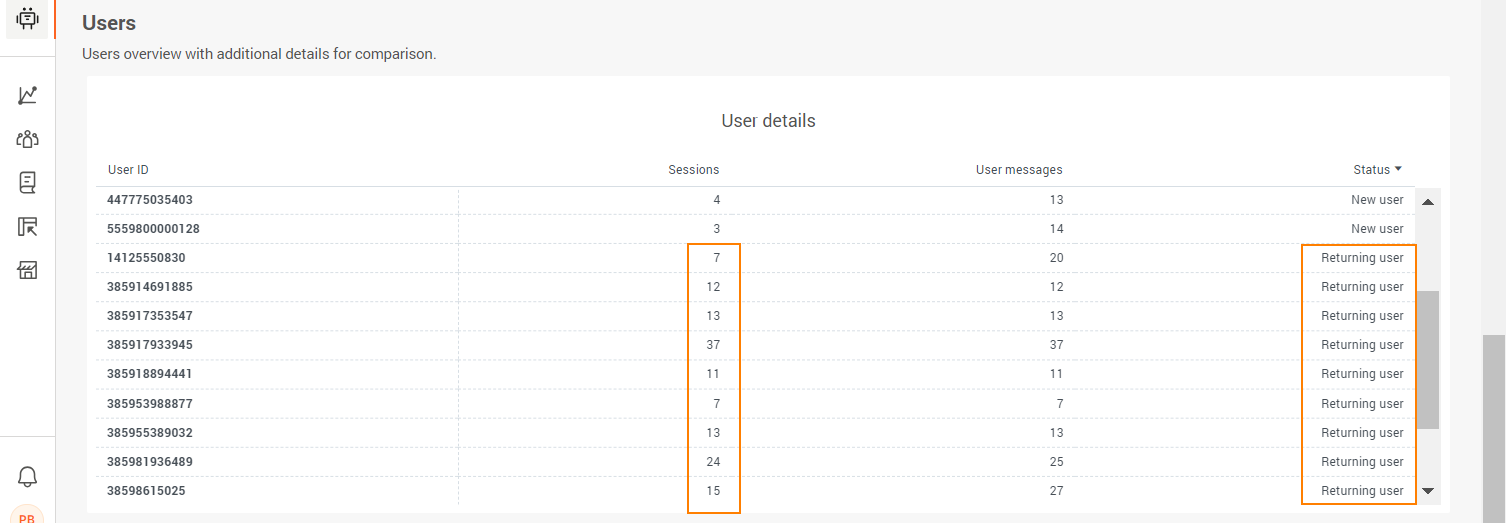

To see the number of returning users, go to the Users tab.

See the number of sessions for returning users. If an end user has a large number of sessions, go to the Sessions tab. Click the Session IDs for the end user to see all the messages in the conversation and identify what challenges they faced.
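If you keep your own list of user and session IDs, for example copied from the Users and Sessions tabs, the review above can be sketched as follows. This is an illustration under assumptions: the `(user_id, session_id)` row format, the function names, and the sample IDs are hypothetical.

```python
from collections import Counter


def sessions_per_user(session_rows):
    """Count distinct sessions per user from (user_id, session_id) pairs."""
    unique_rows = set(session_rows)  # ignore duplicated rows
    return Counter(user_id for user_id, _session_id in unique_rows)


def returning_users(session_rows, min_sessions=2):
    """List users with at least min_sessions sessions, busiest first."""
    counts = sessions_per_user(session_rows)
    selected = [(user, n) for user, n in counts.items() if n >= min_sessions]
    # Sort by session count (descending), then by user ID for a stable order.
    return sorted(selected, key=lambda item: (-item[1], item[0]))


# Hypothetical rows; the last row is a duplicate and is ignored.
rows = [
    ("user-1", "s-101"),
    ("user-1", "s-102"),
    ("user-2", "s-103"),
    ("user-3", "s-104"),
    ("user-3", "s-105"),
    ("user-3", "s-106"),
    ("user-1", "s-102"),
]
for user, count in returning_users(rows):
    print(user, count)
```

The users at the top of the list are the ones whose sessions are worth opening first.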

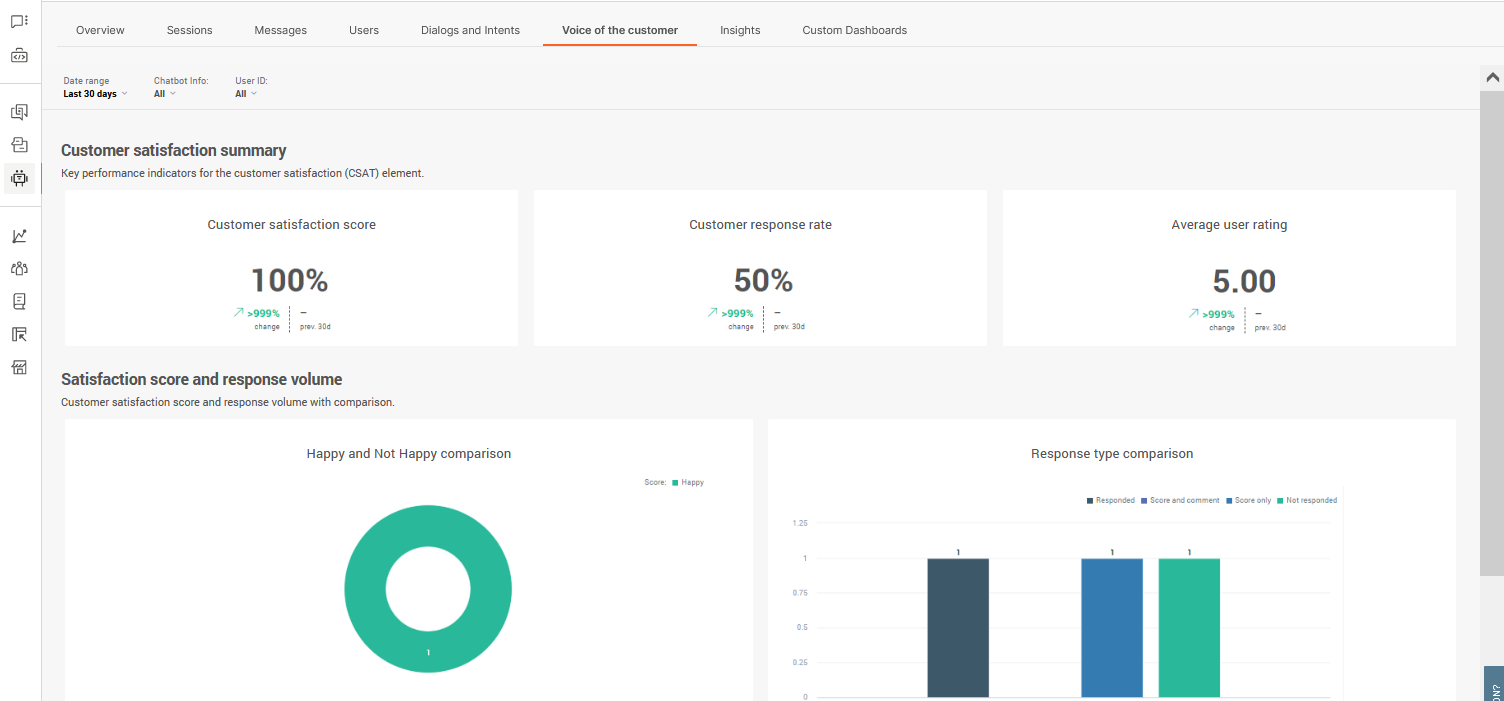

Use customer satisfaction score

Use the customer satisfaction (CSAT) score to see how satisfied your end users are after they finish their conversation with the chatbot.

To see CSAT information, you must have CSAT configured in your chatbot. Refer to the documentation for the CSAT element.

After you configure CSAT, you can check how many end users are happy or unhappy. CSAT enables end users to leave comments, which can help you identify what frustrations they face or what makes them happy.
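A CSAT value is often reported as the percentage of satisfied responses. The sketch below assumes ratings on a 1 to 5 scale, where 4 and 5 count as satisfied. Both the scale and the threshold are assumptions for illustration, and your CSAT configuration might use a different scale.

```python
def csat_percentage(ratings, satisfied_threshold=4):
    """Return the percentage of ratings at or above the threshold.

    Returns None when there are no ratings, because a score of 0%
    would wrongly suggest that every end user was unhappy.
    """
    if not ratings:
        return None
    satisfied = sum(1 for rating in ratings if rating >= satisfied_threshold)
    return 100.0 * satisfied / len(ratings)


# Hypothetical ratings collected after conversations.
score = csat_percentage([5, 4, 3, 1, 5])
print(f"CSAT: {score:.0f}%")
```

Read the comments alongside the score, because the percentage alone does not explain why end users are unhappy.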

To view the CSAT information, go to the Voice of the Customer tab.

How many dialogs request agent assistance?

An end user might be redirected to an agent in one or more of the following cases:

- The chatbot assistance is limited by design. Example: The chatbot is designed only to provide initial support.

- The chatbot is unable to understand what the end user wants.

- An agent needed to take over the conversation because the chatbot could not resolve the end user’s query.

- The end user requested to be transferred to an agent.

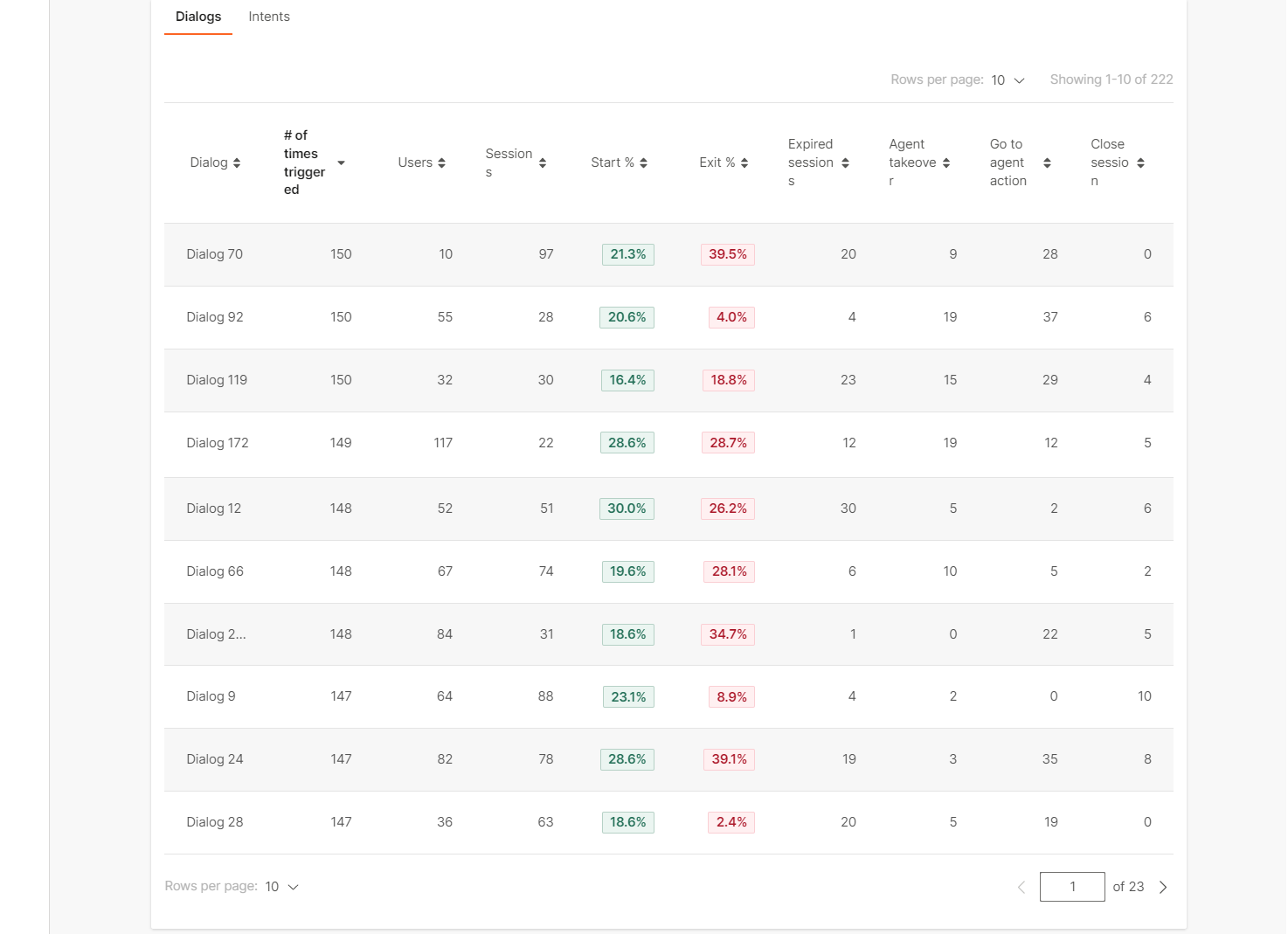

Go to the Dialogs and intents tab > Dialog and intent insights > Dialog insights section. See which dialogs resulted in either the Go to agent action or Agent’s takeover. If these dialogs are not designed to require an agent's assistance, the dialog or the chatbot might need to be redesigned.
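The check described above can be sketched as a small filter over per-dialog counts. The data layout, the dialog names, and the 20% threshold are all hypothetical. The sketch assumes you note, for each dialog, the number of sessions and the number that ended with an agent.

```python
def unexpected_handovers(dialog_stats, handover_by_design, max_rate=0.2):
    """Flag dialogs whose agent handover rate exceeds max_rate.

    dialog_stats maps a dialog name to (sessions, handovers).
    Dialogs listed in handover_by_design are skipped, because they
    are meant to end with an agent.
    """
    flagged = []
    for dialog, (sessions, handovers) in dialog_stats.items():
        if dialog in handover_by_design or sessions == 0:
            continue
        rate = handovers / sessions
        if rate > max_rate:
            flagged.append((dialog, rate))
    # Highest handover rate first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical counts noted from the Dialog insights section.
stats = {
    "Talk to agent": (100, 90),
    "Order status": (200, 70),
    "Opening hours": (150, 3),
}
for dialog, rate in unexpected_handovers(stats, {"Talk to agent"}):
    print(f"{dialog}: {rate:.0%} of sessions went to an agent")
```

Dialogs that this filter flags are candidates for redesign. Dialogs that are designed to hand over to an agent are excluded on purpose.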

Improve your chatbot

Identify if conversations are ineffective and learn how to fix them.

- How do end users start conversations and enter a dialog?

- Are there messages that were not assigned to intents?

- What types of messages could the chatbot not process?

Dialog entry

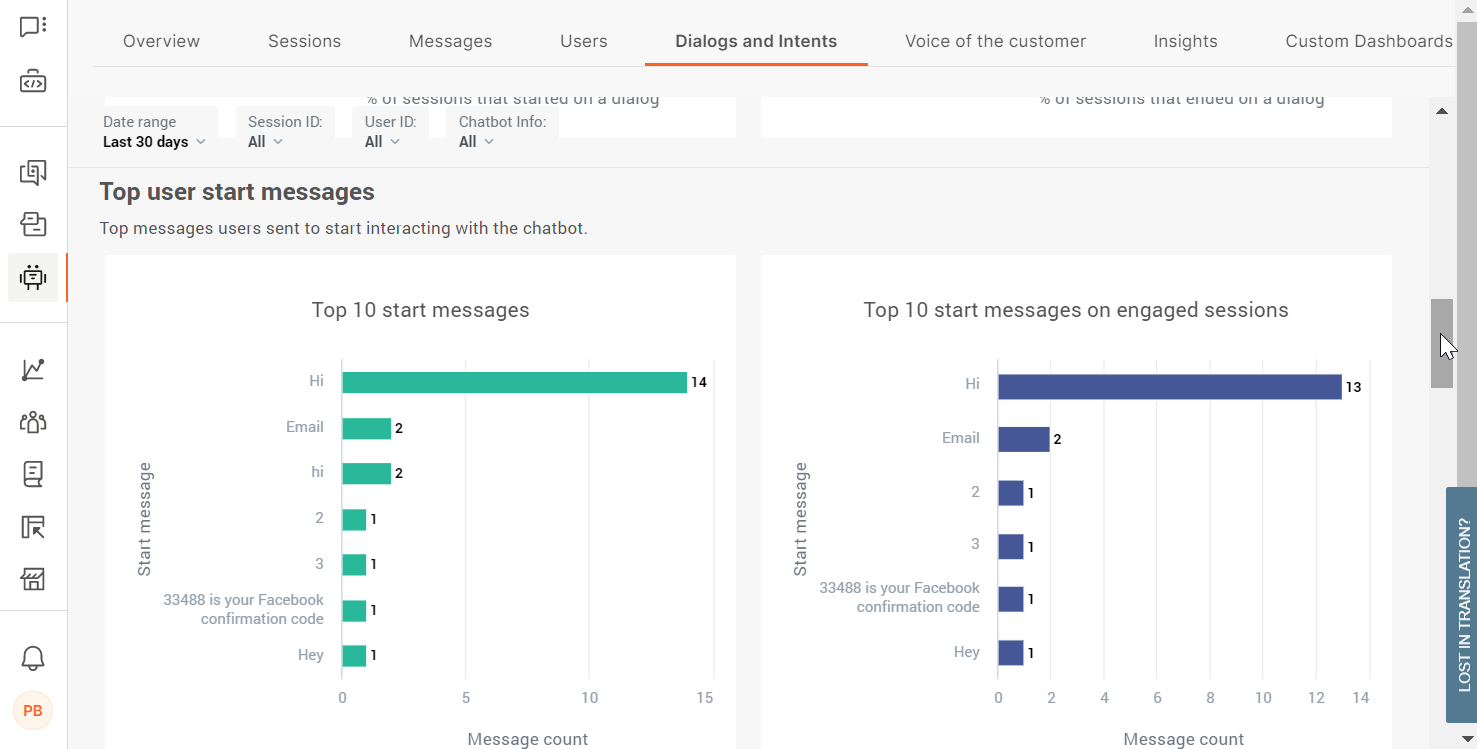

In the Dialogs and intents tab > Top user start messages section, identify the most common messages that end users send as conversation entry points.

Check whether these messages are present in the Not understood user messages and Unhandled user messages sections. If you find that end user start messages are present in these sections, correct these errors.
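If the lists are long, the comparison above can be done with a short script instead of by eye. This is a minimal sketch, assuming you have copied the three lists as plain text. The function names and sample messages are hypothetical. Matching ignores letter case and extra spaces, which is a simplification and will not catch rephrased messages.

```python
def normalize(message):
    """Lowercase a message and collapse repeated whitespace."""
    return " ".join(message.lower().split())


def problem_start_messages(start_messages, not_understood, unhandled):
    """Return start messages that also appear in either problem list."""
    problems = {normalize(m) for m in not_understood}
    problems |= {normalize(m) for m in unhandled}
    return [m for m in start_messages if normalize(m) in problems]


# Hypothetical messages copied from the three sections.
start = ["Hi", "Track my order", "  opening HOURS "]
not_understood = ["track my  order", "refund??"]
unhandled = ["Opening hours"]

for message in problem_start_messages(start, not_understood, unhandled):
    print(repr(message))
```

Each message that the script prints is a conversation entry point that the chatbot currently fails on, so it is a good first candidate for correction.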

Not understood user messages

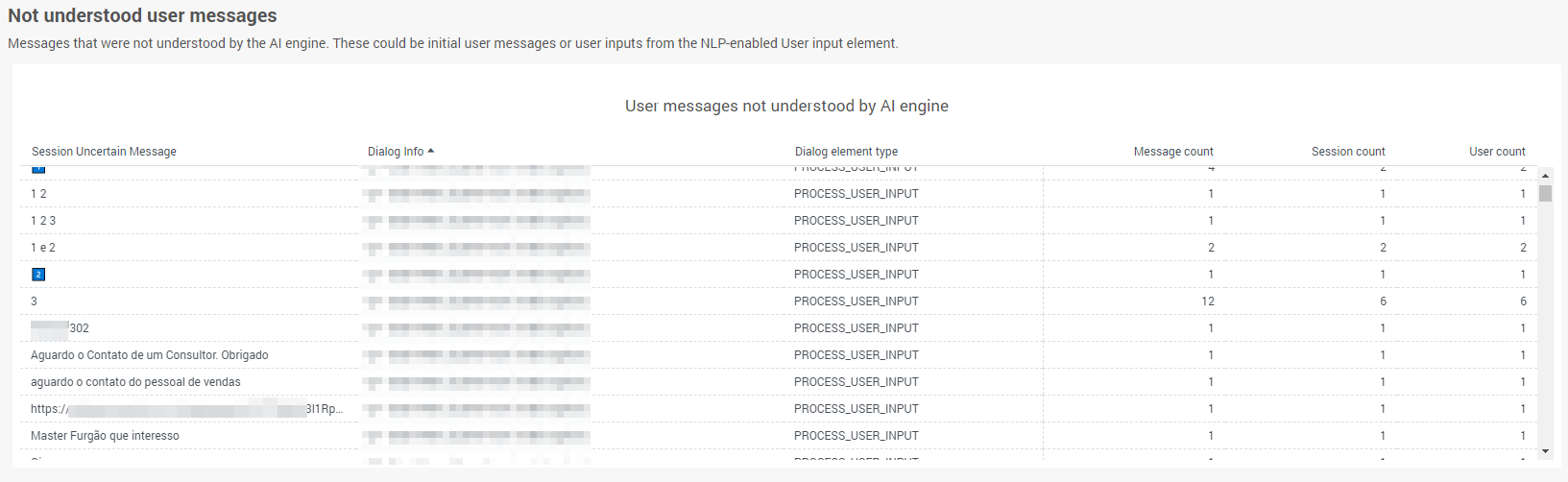

Use the Dialogs and intents tab > Not understood user messages list to identify end user messages for which the chatbot cannot identify an intent.

You can add these messages to the training dataset as applicable, or even create new intents for the messages.

If this list includes any messages that are also present in the Dialogs and intents tab > Top user start messages section, you might need to include relevant messages in the training dataset of your starting intent.

Unhandled user messages

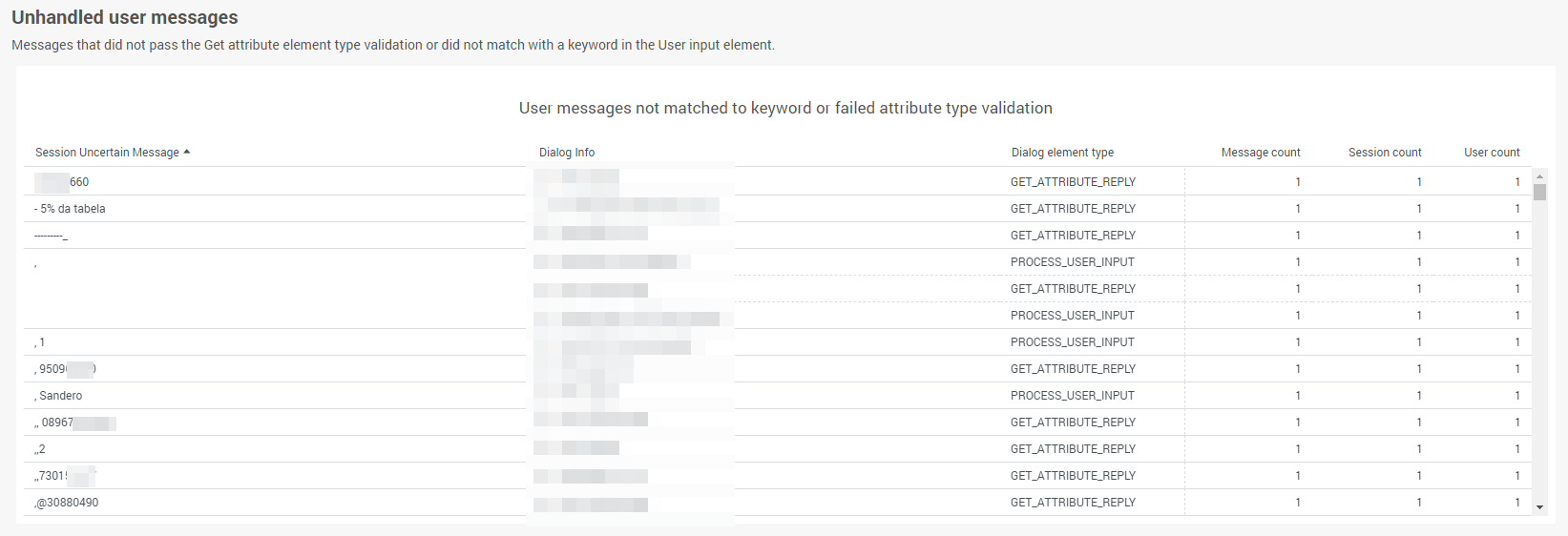

In the Dialogs and intents tab > Unhandled user messages section, check the user messages that the chatbot was not able to handle. The list gives an overview of where end users did not know how to respond to the chatbot or responded incorrectly.

You might need to add more synonyms to your keywords to help the chatbot understand variants of end user responses.

Train the chatbot

After you analyze the data for your conversations, use your findings to train the chatbot.

- Add missing phrases to intents.

- Add intents where required to improve the conversational design.

- Add more synonyms to keywords where required.

- Update the User response element to include missing options for end user responses. Example: Add relevant keywords.