Predefined Dashboards

Use these reports to get detailed insight into how your chatbots are performing from various perspectives, so that you can identify the areas on which to focus optimization efforts.

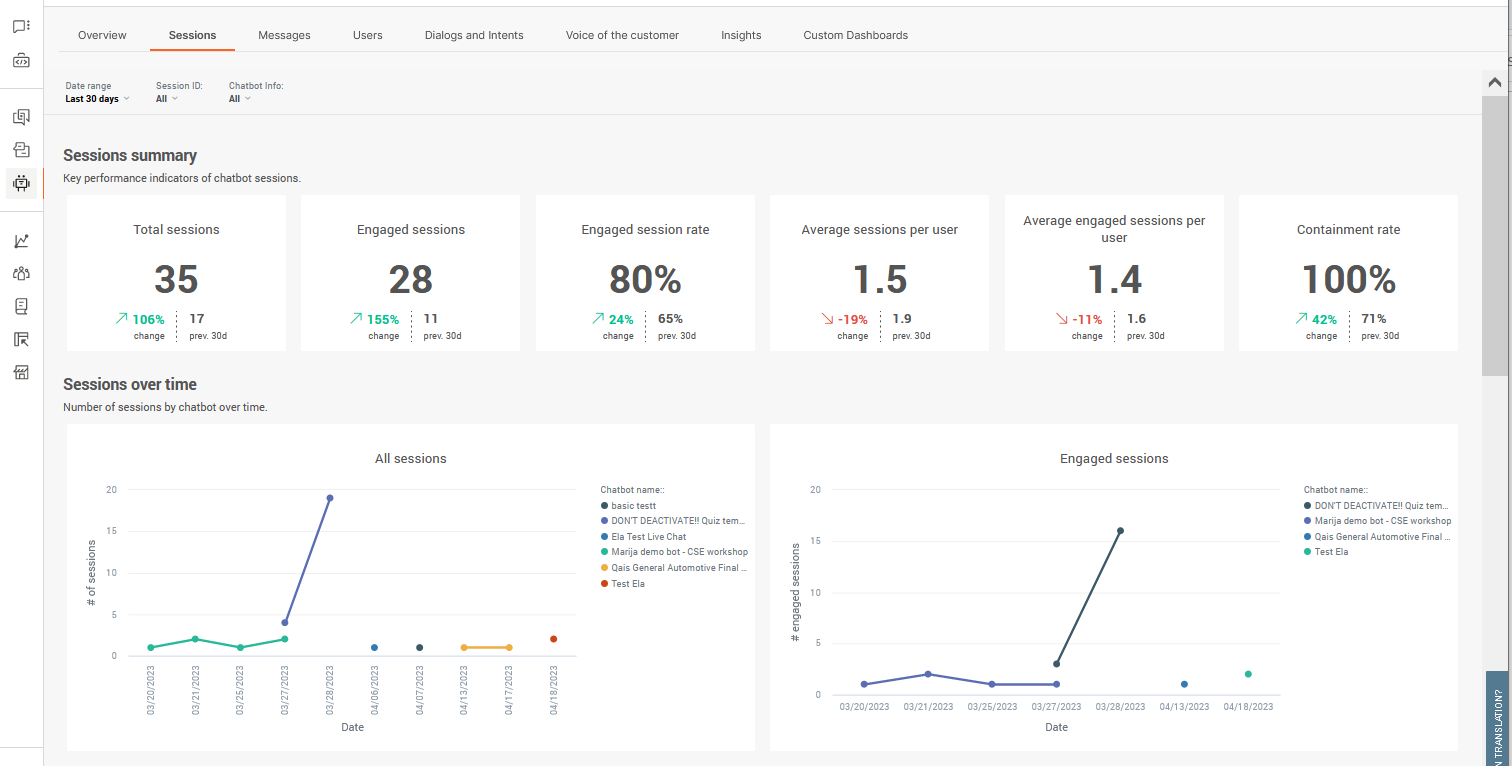

Sessions

Get key performance indicators around chatbot sessions

If sessions show low containment rates or early drop-off, this could be a sign that something more fundamental is wrong with the chatbot, rather than with a specific part of the customer journey.

A session is the whole conversation between the chatbot and the end user, from start to end. Although users can always restart or come back to a chatbot, each session is limited to one chat and ends when it is closed, either explicitly or through a timeout.

Total sessions is the number of chat sessions opened by end users, regardless of whether the chat timed out or was abandoned before any dialog took place. The value is a whole number.

Engaged sessions is the total number of sessions in which end users initiated a session and then sent a minimum of 1 message to the chatbot. The value is a whole number. Ideally, this number should be as close to Total sessions as possible.

Engaged session rate is the rate at which end users initiated a session and then sent a minimum of 1 message to the chatbot. The value is the percentage of engaged sessions over total sessions.

Average sessions per user is the average number of sessions initiated by each individual end user. The value is rounded to one decimal place (for example, 1.0).

Average engaged sessions per user is the average number of engaged sessions per individual end user, that is, sessions in which the user initiated a session and then sent a minimum of 1 message to the chatbot. The value is rounded to one decimal place (for example, 1.0).
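The session KPIs above reduce to simple counts and ratios. The following Python sketch computes them from a list of session records. The `Session` record and its fields are hypothetical stand-ins for whatever data you have, and dividing engaged sessions by all users (rather than by engaged users only) is an assumption, not necessarily how Answers calculates that average.

```python
from dataclasses import dataclass


@dataclass
class Session:
    # Hypothetical record: one row per chatbot session.
    session_id: str
    user_id: str
    user_messages: int  # messages the end user sent in this session


def session_kpis(sessions: list[Session]) -> dict[str, float]:
    """Compute the session KPIs described above."""
    total = len(sessions)
    # A session is engaged if the end user sent at least 1 message.
    engaged = sum(1 for s in sessions if s.user_messages >= 1)
    users = {s.user_id for s in sessions}
    return {
        "total_sessions": total,
        "engaged_sessions": engaged,
        # Percentage of engaged sessions over total sessions.
        "engaged_session_rate": 100 * engaged / total if total else 0.0,
        # Averages are rounded to one decimal place.
        "avg_sessions_per_user": round(total / len(users), 1) if users else 0.0,
        # Assumption: divide by all users, not only engaged users.
        "avg_engaged_sessions_per_user": round(engaged / len(users), 1) if users else 0.0,
    }


sessions = [
    Session("s1", "u1", 3),
    Session("s2", "u1", 0),  # opened but never engaged
    Session("s3", "u2", 5),
    Session("s4", "u3", 0),
]
kpis = session_kpis(sessions)
print(kpis)
```

For these four sample sessions from three users, the sketch gives a 50.0% engaged session rate, 1.3 sessions per user, and 0.7 engaged sessions per user.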

Containment rate is the rate at which sessions end without the end user having to abandon the session before reaching a resolution. Sessions that are passed to support agents are considered contained sessions. The value is the percentage of contained sessions over total sessions.

Sessions through time displays the number of sessions over time, which lets you identify when chatbot interactions are most active. You can use this to decide when to plan extra human support, if required. The first graph is for total sessions, the second graph is for engaged sessions. Hover over any point in the graphs for more information. Use the filters at the top of the report to modify the time view.

Session ends shows how sessions end, for example, whether chats expire because the user stopped responding. Use this chart to check whether your chatbots are serving their purpose.

- Expired sessions - user stopped replying to the chatbot and the reply time lapsed

- Agents' takeover - chatbot could not handle the request and the conversation was handed over to Conversations

- To agent - user requested further support or to be transferred to a human

- Close session - the conversation reaches the Close session element and the chat ends

Duration shows the average session duration before sessions end. Unusually long or short durations can indicate ineffective customer journeys. Durations are shown in hh:mm:ss format (00:00:00).

- Session duration - all sessions

- Engaged session duration - engaged only

- Interaction time - time users spend talking to the chatbot
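The average durations above can be reproduced with a short Python sketch. It assumes you have each session's duration as a `timedelta`; the sample values are made up, and rounding to the nearest second is an assumption about how the report displays values.

```python
from datetime import timedelta


def format_hms(seconds: float) -> str:
    """Format a duration in seconds as hh:mm:ss (00:00:00)."""
    total = int(round(seconds))
    hours, rest = divmod(total, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def average_duration(durations: list[timedelta]) -> str:
    """Average a list of durations and format the result as hh:mm:ss."""
    if not durations:
        return format_hms(0)
    mean_seconds = sum(d.total_seconds() for d in durations) / len(durations)
    return format_hms(mean_seconds)


# Hypothetical durations; the second session was never engaged.
all_sessions = [timedelta(minutes=2), timedelta(seconds=30), timedelta(minutes=5, seconds=30)]
engaged_sessions = [all_sessions[0], all_sessions[2]]

print(average_duration(all_sessions))      # Session duration: 00:02:40
print(average_duration(engaged_sessions))  # Engaged session duration: 00:03:45
```

Excluding the short, non-engaged session raises the average, which is why Session duration and Engaged session duration are reported separately.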

Session ends always add up to the total number of sessions, because they are the possible end scenarios for each conversation with the chatbot. Depending on the type of chatbot you have, some end reasons are expected to be more common than others. However, if you notice, for example, that agent takeover is too high, you can add more intents to cover more scenarios and train the chatbot further.
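Because every session has exactly one end reason, the Session ends breakdown is a simple tally that always sums to Total sessions. This Python sketch counts end reasons and flags a high share of agent takeovers; the 30% threshold is a hypothetical example value, not a recommendation from Answers.

```python
from collections import Counter

# End reasons as named in the Session ends chart.
END_REASONS = ("Expired sessions", "Agents' takeover", "To agent", "Close session")


def session_end_breakdown(end_reasons: list[str]) -> Counter:
    """Tally sessions per end reason; each session has exactly one end reason."""
    counts = Counter(end_reasons)
    unexpected = set(counts) - set(END_REASONS)
    if unexpected:
        raise ValueError(f"unexpected end reasons: {sorted(unexpected)}")
    return counts


def takeover_too_high(counts: Counter, threshold: float = 0.3) -> bool:
    """Hypothetical check: is the agent takeover share above `threshold`?"""
    total = sum(counts.values())
    return total > 0 and counts["Agents' takeover"] / total > threshold


ends = ["Close session", "Expired sessions", "Agents' takeover", "Close session", "To agent"]
counts = session_end_breakdown(ends)
assert sum(counts.values()) == len(ends)  # ends always add up to total sessions
print(counts.most_common())
print(takeover_too_high(counts))  # False: 1 of 5 sessions (20%)
```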

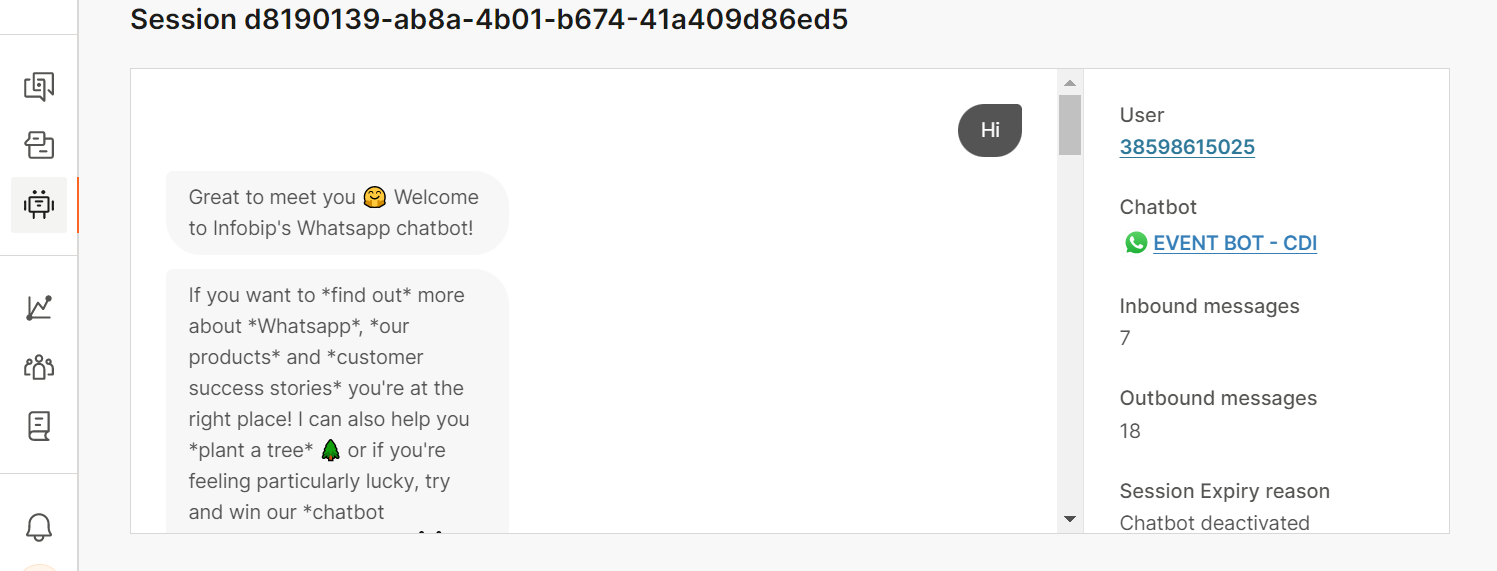

Session details provides details about each chatbot session. Use the metric headers to sort the table as required.

- Session ID - Unique identifier for the session

- User ID - Identifier for the end user. The format of the ID depends on the channel. Example: For WhatsApp, the user ID is the phone number

- Session end reason - The reason the session ended. Example: Close_session, Expiry

- Start date - The date on which the end user sent the first message

- Time - The time at which the end user sent the first message

- Engaged session - Whether the end user initiated a session and then sent a minimum of 1 message during the session.

- User messages - The total number of messages sent by the end user during the session

- Chatbot messages - The total number of messages sent by the chatbot during the session

- Duration - How long the session lasted

To drill down into a session, click its Session ID. The session transcript opens in a new window. You can view all the messages that are exchanged between the chatbot and the end user. Use this information to improve the chatbot. Example: You can identify dialogs that end users struggle with, end user messages that the chatbot did not understand, and parts of the session that are not performing as expected.

An overview of the conversation is displayed on the right. You can click the user ID to view information about the user. You can also click the link to the chatbot and make changes, if required.

API gives you an overview of all API calls going through the API element used in chatbots. Here you can identify if calls are executing and returning values as expected, as well as execution times in milliseconds. You'll need to filter the report by individual chatbots to see these.

Depending on how the API calls are configured in the chatbot, the endpoint URLs display either the originalUrl (without resolved attributes in the URL) or the resolvedUrl (with resolved attributes in the URL).

For more information on the API calls themselves, click any of the URL endpoints. For each URL endpoint, you can see how many times it was called, along with the following details:

- Response codes - all available responses a request can have

- Count - how many times the response code was triggered. Useful to see if any of the calls are not successful (and what type of response codes they trigger)

- Average duration - in milliseconds
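To make the per-endpoint figures concrete, here is a Python sketch that groups API calls by response code and averages their durations. The `ApiCall` record and the example URLs are hypothetical; treating anything outside the 2xx range as unsuccessful follows standard HTTP semantics, not a documented Answers rule.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ApiCall:
    # Hypothetical record of one API element execution.
    url: str
    response_code: int
    duration_ms: int


def summarize_endpoint(calls: list[ApiCall], url: str) -> dict[int, dict[str, float]]:
    """Count calls and average duration (ms) per response code for one endpoint."""
    durations: dict[int, list[int]] = defaultdict(list)
    for call in calls:
        if call.url == url:
            durations[call.response_code].append(call.duration_ms)
    return {
        code: {"count": len(ds), "avg_duration_ms": sum(ds) / len(ds)}
        for code, ds in sorted(durations.items())
    }


def failed_codes(summary: dict[int, dict[str, float]]) -> list[int]:
    """Response codes outside the HTTP 2xx success range."""
    return [code for code in summary if not 200 <= code < 300]


ORDERS = "https://api.example.com/orders"  # hypothetical endpoint
calls = [
    ApiCall(ORDERS, 200, 120),
    ApiCall(ORDERS, 200, 80),
    ApiCall(ORDERS, 500, 300),
    ApiCall("https://api.example.com/other", 200, 50),
]
summary = summarize_endpoint(calls, ORDERS)
print(summary)
print(failed_codes(summary))
```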

If you select a URL endpoint that did not display its resolved URLs in the Session analytics, clicking it shows all the resolved URLs for that endpoint. For each resolved URL, you can check the following information:

- Response code - helps you see which HTTP requests were not completed successfully

- Response duration - how long it took for the request to complete in milliseconds

- Timestamp - the exact execution time

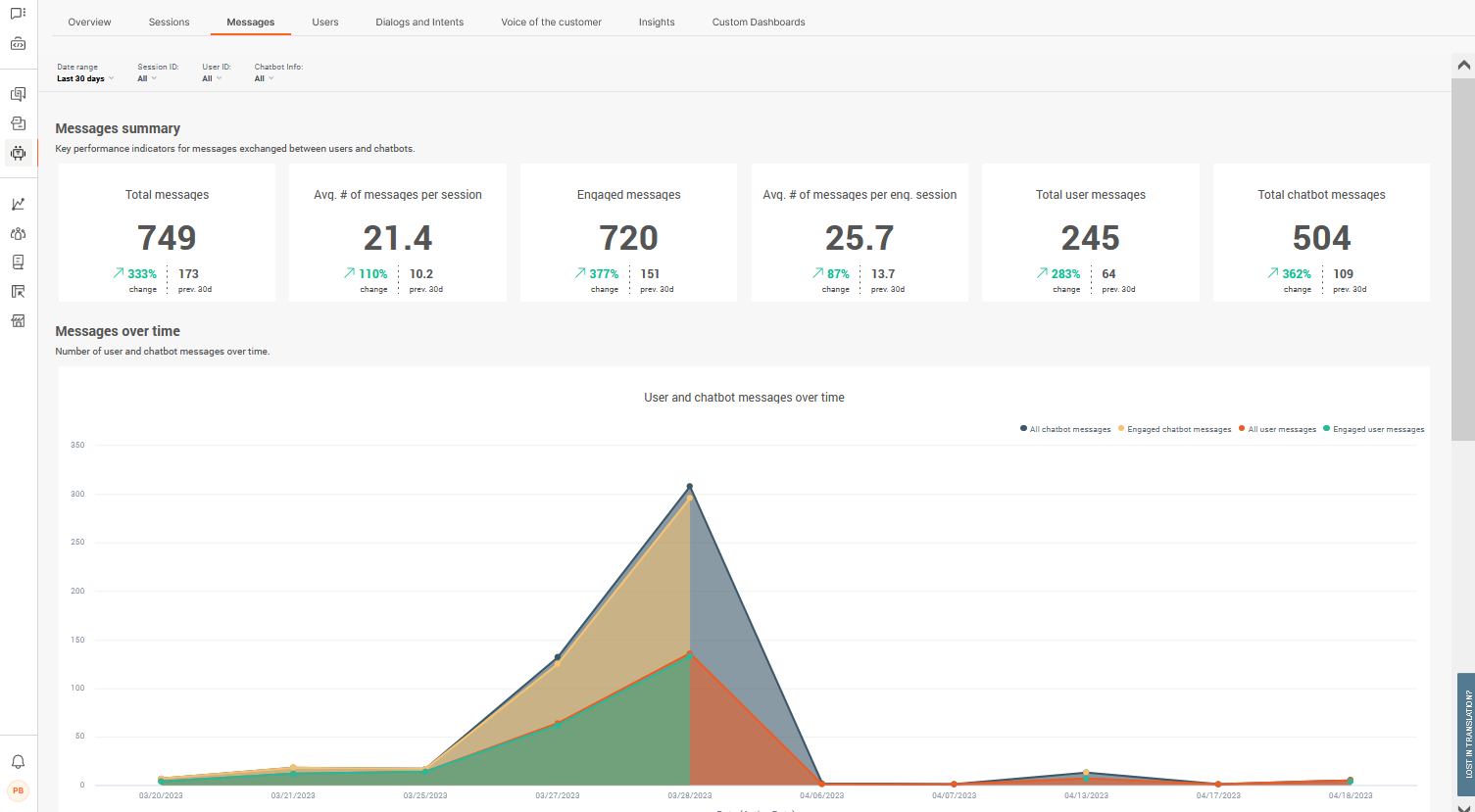

Messages

Get key performance indicators around chatbot messages for both chatbot and user

Check if users are dropping off too quickly or getting the resolutions they need by comparing message totals across types. If engagement numbers are low, or if chatbots are sending many more messages than users, this might be an indication that your chatbot is not providing the best support it could.

Messages are sent either by the chatbot or by the user. Answers also determines whether the user is engaged with the chat and calculates the average message exchange across all sessions.

Total messages is the total number of messages exchanged, whether users engaged with the chat or not. To indicate that the chatbot is working efficiently, this number should be as close to the number of engaged messages as possible. If it is much higher than the number of engaged messages, users may be dropping off without resolutions. The value is a whole number.

Average number of messages per session is the average number of messages exchanged per session across all sessions, whether users engaged with the chat or not. To indicate that the chatbot is working efficiently, this number should be as close to the average number of messages per engaged session as possible. The value is rounded to one decimal place (for example, 1.0).

Engaged messages is the total number of messages exchanged as part of an engaged session. The value is a whole number.

An engaged session is one in which an end user initiated a session and then sent a minimum of 1 message to the chatbot.

Average number of messages per engaged session is the average number of messages exchanged per engaged session. The value is rounded to one decimal place (for example, 1.0).

Total user messages is the total number of messages received from users, that is, the total number of incoming ⬅ messages. The value is a whole number.

Total chatbot messages is the total number of messages the chatbot sent to users, that is, the total number of outgoing ➡ messages. The value is a whole number.
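The message KPIs above can be sketched in Python from per-session message counts. `SessionMessages` is a hypothetical record, and the chatbot-to-user message ratio is an extra derived figure (not a report metric) that reflects the comparison suggested at the start of this section.

```python
from dataclasses import dataclass


@dataclass
class SessionMessages:
    # Hypothetical per-session message counts.
    user_messages: int     # incoming messages from the end user
    chatbot_messages: int  # outgoing messages from the chatbot

    @property
    def engaged(self) -> bool:
        # Engaged session: the end user sent at least 1 message.
        return self.user_messages >= 1

    @property
    def total(self) -> int:
        return self.user_messages + self.chatbot_messages


def message_kpis(sessions: list[SessionMessages]) -> dict[str, float]:
    engaged = [s for s in sessions if s.engaged]
    total_messages = sum(s.total for s in sessions)
    engaged_messages = sum(s.total for s in engaged)
    user_total = sum(s.user_messages for s in sessions)
    bot_total = sum(s.chatbot_messages for s in sessions)
    return {
        "total_messages": total_messages,
        "avg_messages_per_session": round(total_messages / len(sessions), 1) if sessions else 0.0,
        "engaged_messages": engaged_messages,
        "avg_messages_per_engaged_session": round(engaged_messages / len(engaged), 1) if engaged else 0.0,
        "total_user_messages": user_total,
        "total_chatbot_messages": bot_total,
        # Not a report metric: chatbot messages per user message (0.0 if no user messages).
        "chatbot_to_user_ratio": bot_total / user_total if user_total else 0.0,
    }


sessions = [SessionMessages(3, 4), SessionMessages(0, 1), SessionMessages(2, 3)]
print(message_kpis(sessions))
```

In this sample, the non-engaged session pulls the overall average down to 4.3 messages per session, compared with 6.0 per engaged session.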

Messages through time displays the number of exchanged messages over time, which lets you identify when chatbot interactions and message levels are most active. The first graph covers all sessions, the second covers engaged sessions only. Hover over any point in the graphs for more information. Use the filters at the top of the report to modify the time view.

Engaged message count and averages provides an overview of engaged message totals for both the chatbot and the end user over time, so that you can identify when either side peaks.

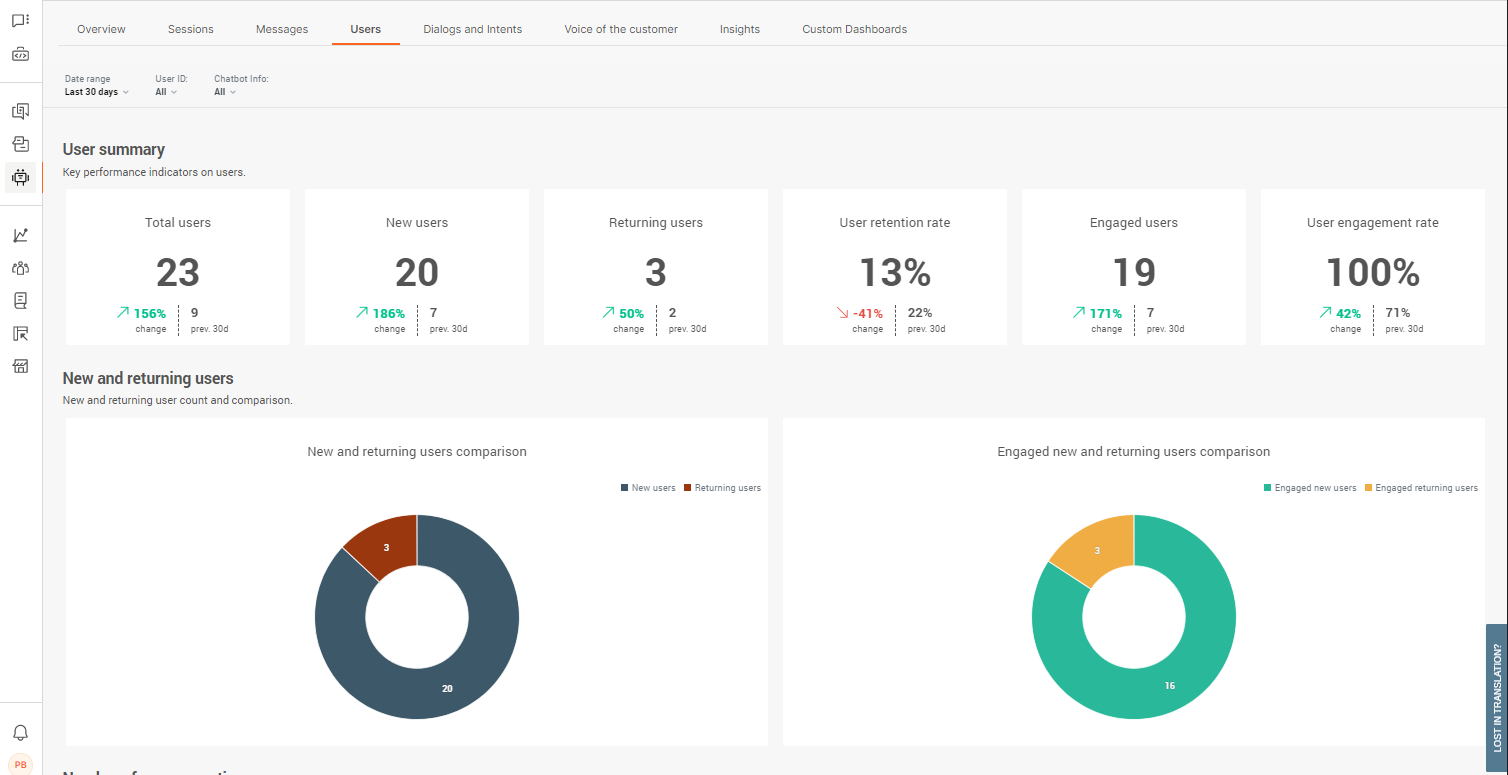

Users

Get key performance indicators around users

Understand how many people come back to chatbots for assistance, how many find a resolution, and how many actually engage with chatbots. Depending on the chatbot's purpose (for example, a chatbot that helps with specific tasks), a higher number of returning users could be a sign that it is efficient in helping users with their queries.

Users are the people at the other end of the chat, who come to the chatbot for assistance or with queries. Users can be new or returning, and they engage with the chatbot to get to their desired resolution. How well they succeed depends on your chatbot's efficacy and design quality.

Total users is the total number of tracked users who have initiated a conversation with a chatbot. It includes users from all sessions, engaged or not, and both new and returning users. The value is a whole number.

New users is the total number of new, or unique, users initiating chats. This works by tracking the user's UUID and checking for matches in the chatbot records. If no match is found, the user is considered a new user. The value is a whole number.

Returning users is the total number of returning users initiating new chats. This works by tracking the user's UUID and checking for matches in the chatbot records. If a match is found, the user is considered a returning user. The value is a whole number.
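The new/returning check amounts to looking up each user ID in the existing records. The Python sketch below assumes you have the set of user IDs seen before the reporting period (a hypothetical input); counting each user once per period is an assumption about how the report deduplicates users. With this approach, New users plus Returning users equals Total users.

```python
from collections import Counter


def classify_users(session_user_ids: list[str], known_user_ids: set[str]) -> dict[str, str]:
    """Label each user who initiated a chat as 'new' or 'returning'.

    session_user_ids: user IDs of the chats initiated in the reporting period.
    known_user_ids: hypothetical set of IDs already in the chatbot records
    before the period.
    """
    statuses: dict[str, str] = {}
    for user_id in session_user_ids:
        if user_id not in statuses:  # count each user once per period
            statuses[user_id] = "returning" if user_id in known_user_ids else "new"
    return statuses


statuses = classify_users(["u1", "u2", "u2", "u3"], known_user_ids={"u1"})
counts = Counter(statuses.values())
print(counts["new"], counts["returning"], len(statuses))
```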

User retention rate reflects the percentage of users who reached what would be considered a successful resolution or end of the chat, without abandoning the chat or letting it time out. A low retention rate is a strong indicator that your chatbot could use some optimization.

Engaged users is the total number of end users who initiated a session and then sent a minimum of 1 message to the chatbot. The value is a whole number. Ideally, this number should be close to the value of Total users.

User engagement rate reflects the percentage of end users who engage with the chatbot. Chat engagement occurs when an end user initiates a chat and then sends a minimum of 1 message to the chatbot.
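User engagement works at the user level rather than the session level: a user who has one engaged and one non-engaged session still counts once, as engaged. A minimal Python sketch, assuming hypothetical per-session pairs of user ID and number of user messages as input:

```python
def user_engagement(sessions: list[tuple[str, int]]) -> tuple[int, int, float]:
    """Return (total users, engaged users, user engagement rate in %).

    sessions: hypothetical (user_id, user_message_count) pairs, one per session.
    """
    users = {user_id for user_id, _ in sessions}
    # A user counts as engaged if they sent at least 1 message in any session.
    engaged = {user_id for user_id, sent in sessions if sent >= 1}
    rate = 100 * len(engaged) / len(users) if users else 0.0
    return len(users), len(engaged), rate


total, engaged, rate = user_engagement([("u1", 2), ("u1", 0), ("u2", 0), ("u3", 1)])
print(total, engaged, round(rate, 1))
```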

New and returning users shows how your new and returning user numbers compare with each other for all sessions, as well as for engaged sessions. Depending on your chatbot's purpose, a high number of returning users requesting support can indicate that they are not finding effective resolutions.

User count through time displays the number of users initiating chats over time, which lets you identify when chatbot interactions and message levels are most active among new and returning users. Hover over any point in the graphs for more information. Use the filters at the top of the report to modify the time view.

User details gives you a detailed look into users at a much more granular level. Use the metric headers to sort the display as required.

- User ID - Identifier for the end user. The format of the ID depends on the channel. Example: For WhatsApp, the user ID is the phone number

- Sessions - total number of sessions the user had with the chatbot

- Inbound messages - the total number of messages sent by the user to the chatbot

- User status - whether the user is a new or returning user

To see further details about an individual user, including their sessions, click the user ID.

- Total sessions - number of sessions the user has taken part in

- Inbound messages - the total number of messages this user sent to the chatbot

- First time seen - the first time the user chatted with the chatbot (time of user's first message)

- Last time seen - the last time the user actively chatted (time of the user’s last message)

- Average session duration - the average time conversations lasted between this user and the chatbot

- CSAT score - the score the user left at the end of the chat. This requires the CSAT element to be present in the chat

Click the Session ID to view conversation records. You can scroll through all the messages exchanged between the chatbot and the user and check the overview of the conversation on the right side.

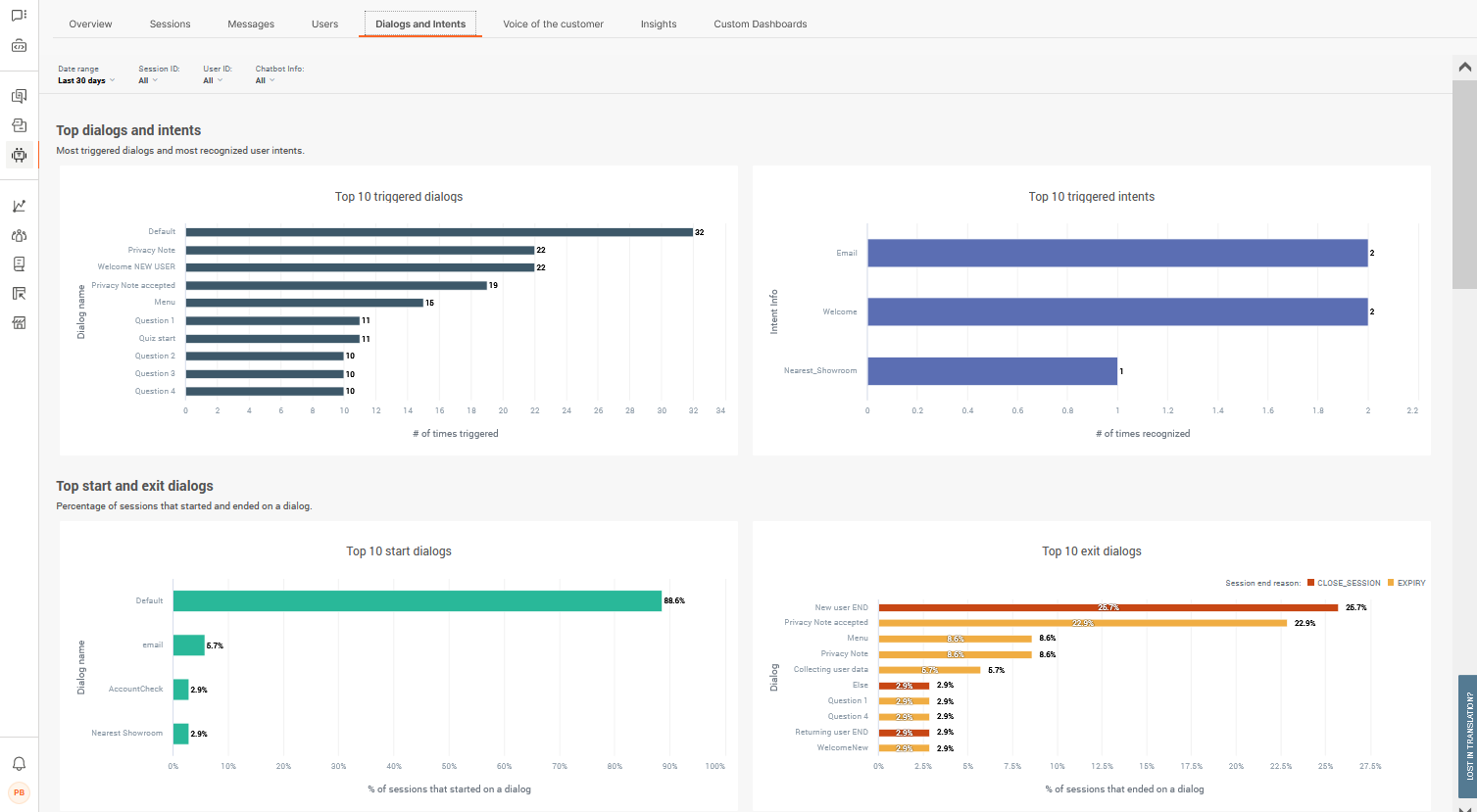

Dialogs and intents

Get key performance indicators around used dialogs and triggered intents

Understand which parts of the customer journey perform better than others, in order to learn more about your customers and their preferred routes of interaction with chatbots. This can drive optimization efforts, because you can follow the parts of the logic that prove more effective in user interactions.

Dialogs are the concrete stages in the customer journey to which users are routed, depending on their actions and responses. Intents work in a similar way, but are used in AI chatbots that rely on machine learning and intent recognition.

Read the section about what makes up a chatbot for more information about dialogs and intents.

Top dialogs and intents displays the 10 most frequently triggered dialogs and intents, along with their totals. Default dialogs and welcome intents are likely to be at the top, followed by the places where you most often take users next. Use the filters as required when you need a closer look.

You can also use these analytics to see correlations between top dialogs and intents. A large difference between the two means that users reach dialogs through routes other than the intents the dialogs are based on. In that case, also check the numbers in the Unrecognized intents and Unhandled messages analytics.
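The Top dialogs and intents chart and the comparison between dialogs and intents can be sketched with Python's `collections.Counter`. The event lists and the dialog-to-intent mapping are hypothetical inputs; a positive gap means the dialog was entered more often than its intent was recognized, so users reached it some other way.

```python
from collections import Counter


def top_n(events: list[str], n: int = 10) -> list[tuple[str, int]]:
    """Return the n most frequent names with their counts."""
    return Counter(events).most_common(n)


def dialog_intent_gaps(dialog_events: list[str], intent_events: list[str],
                       dialog_to_intent: dict[str, str]) -> dict[str, int]:
    """Dialog triggers minus recognitions of the intent each dialog is based on."""
    dialogs = Counter(dialog_events)
    intents = Counter(intent_events)  # missing keys count as 0
    return {d: dialogs[d] - intents[i] for d, i in dialog_to_intent.items()}


dialog_events = ["Welcome", "Orders", "Welcome", "Orders", "FAQ", "Orders"]
intent_events = ["welcome", "order_status", "welcome"]
print(top_n(dialog_events))
print(dialog_intent_gaps(dialog_events, intent_events,
                         {"Welcome": "welcome", "Orders": "order_status"}))
```

Here the Orders dialog was entered three times but its intent was recognized only once, a gap of 2.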

Top start and exit dialogs show the 10 most common dialogs in which chats with end users start and end. New sessions will likely begin with your default dialog, but they can also start elsewhere if returning users or configured settings lead to a point in the middle of the customer journey. Exit dialogs show where sessions end, and the end reason can be one of the following:

- Expired sessions - user stopped replying to the chatbot and the reply time lapsed

- Agents' takeover - chatbot could not handle the request and the conversation was handed over to Conversations

- To agent - user requested further support or to be transferred to a human

- Close session - the conversation reaches the Close session element and the chat ends

Top user start messages records the 10 most common messages that users send to initiate a chat. The first graph shows totals for all sessions, and the second graph shows engaged sessions only. Use these messages to extend your keyword synonyms and intents if they are not already covered. Note that these messages do not appear as unrecognized inputs in further reports.

The Unknown category indicates messages that do not contain text, for example, messages with images or postbacks.
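The grouping for Top user start messages, including the Unknown category, can be sketched in Python as follows. The message dictionaries are a hypothetical shape; only the rule that messages without text fall into Unknown comes from the description above.

```python
from collections import Counter


def start_message_label(message: dict) -> str:
    """Use the message text, or 'Unknown' when the message has no text."""
    text = message.get("text")
    return text if text else "Unknown"


def top_start_messages(first_messages: list[dict], n: int = 10) -> list[tuple[str, int]]:
    """Top n first user messages; first_messages holds one dict per session."""
    return Counter(start_message_label(m) for m in first_messages).most_common(n)


first_messages = [
    {"text": "Hi"},
    {"text": "Hi"},
    {"image_url": "https://example.com/photo.jpg"},  # image: no text
    {"text": "Track my order"},
    {"postback": "MAIN_MENU"},  # postback: no text
]
print(top_start_messages(first_messages))
```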

Dialog insights gives you a detailed look into dialogs at a much more granular level. Use the metric headers to sort the display as required.

- Times triggered - how many times a dialog has been entered by a user

- Users - the total number of users who entered this dialog

- Sessions - in how many sessions the dialog was triggered

- Start % - how often, as a percentage, this was the first dialog when the chat started

- End % - how often, as a percentage, this was the last dialog before the session ended

- Expired sessions - the number of times the user let the session expire in this dialog

- Close session - the number of times the conversation ended naturally at the point designed for closing sessions

- Go to agent action - the number of times the user requested to talk to an agent

- Agent’s takeover - the number of times an agent took over the conversation from the chatbot

Bear in mind that the number of sessions, users, and times the dialog was triggered can differ, because an end user might enter this dialog several times while talking with the chatbot.
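To see why the three counts diverge, the following Python sketch computes them from a hypothetical log of dialog entries: re-entering a dialog raises Times triggered, while Sessions and Users count distinct IDs. The `DialogEntry` record is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass
class DialogEntry:
    # Hypothetical log row: a user entered a dialog during a session.
    session_id: str
    user_id: str
    dialog: str


def dialog_insights(entries: list[DialogEntry]) -> dict[str, dict[str, int]]:
    """Times triggered, distinct sessions, and distinct users per dialog."""
    times: dict[str, int] = {}
    sessions: dict[str, set[str]] = {}
    users: dict[str, set[str]] = {}
    for e in entries:
        times[e.dialog] = times.get(e.dialog, 0) + 1
        sessions.setdefault(e.dialog, set()).add(e.session_id)
        users.setdefault(e.dialog, set()).add(e.user_id)
    return {
        d: {"times_triggered": times[d], "sessions": len(sessions[d]), "users": len(users[d])}
        for d in times
    }


entries = [
    DialogEntry("s1", "u1", "Orders"),
    DialogEntry("s1", "u1", "Orders"),  # same user re-enters the dialog
    DialogEntry("s2", "u1", "Orders"),  # same user, new session
    DialogEntry("s3", "u2", "Orders"),
]
print(dialog_insights(entries))
```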

Intent insights gives you a detailed look into intents at a much more granular level. Use the metric headers to sort the display as required.

- Times recognized - the number of times the chatbot recognized a user’s utterance and forwarded the user to the correct intent

- Users - total number of users routed to the intent

- Sessions - total number of sessions in which the intent was recognized

- Start - the percentage of times this intent was the first one recognized in a session

Unhandled messages are messages that did not pass validation, either because the response did not match the attribute type expected by a Save user response element, or because no keyword from a User response element could be matched.

- Message - the specific message where the chatbot is having problems

- Dialog - name of the dialog where it happened

- Element type - which element didn’t recognize the incoming message (either User response or Save user response)

- Message count - how many times this particular message was received

- Session count - how many sessions this happened in

- User count - the total number of users who sent the message
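The Unhandled messages table is an aggregation over individual validation failures. This Python sketch groups hypothetical failure records by message, dialog, and element type; the sample inputs (a word typed where a number was expected, an unmatched keyword) are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnhandledEvent:
    # Hypothetical record of one message that failed validation.
    message: str
    dialog: str
    element_type: str  # "User response" or "Save user response"
    session_id: str
    user_id: str


def unhandled_table(events: list[UnhandledEvent]) -> list[dict]:
    """One row per (message, dialog, element type), most frequent first."""
    groups: dict[tuple[str, str, str], list[UnhandledEvent]] = {}
    for e in events:
        groups.setdefault((e.message, e.dialog, e.element_type), []).append(e)
    rows = [
        {
            "message": msg,
            "dialog": dialog,
            "element_type": element,
            "message_count": len(evs),
            "session_count": len({e.session_id for e in evs}),
            "user_count": len({e.user_id for e in evs}),
        }
        for (msg, dialog, element), evs in groups.items()
    ]
    return sorted(rows, key=lambda r: r["message_count"], reverse=True)


events = [
    UnhandledEvent("twenty", "Order quantity", "Save user response", "s1", "u1"),
    UnhandledEvent("twenty", "Order quantity", "Save user response", "s1", "u1"),
    UnhandledEvent("twenty", "Order quantity", "Save user response", "s2", "u2"),
    UnhandledEvent("yep", "Confirm order", "User response", "s3", "u3"),
]
for row in unhandled_table(events):
    print(row)
```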

Use these analytics to tweak parts of the configuration where users, as well as the chatbot, may be having issues in providing a correct response. This could be anything from informal language being used, like slang, to an attribute type incorrectly set up.

Not understood messages tracks all user messages that the chatbot does not recognize, meaning that it could not assign them to any intent. These insights are crucial for optimizing chatbot training, because they give you clear insight into user behavior, the kind of responses to expect, and the existing gaps in the chatbot configuration.

- Message - the specific message where the chatbot is having problems

- Dialog - name of the dialog where it happened

- Element type - which element didn’t recognize the incoming message (either User response or Save user response)

- Message count - how many times this particular message was received

- Session count - how many sessions this happened in

- User count - the total number of users who sent the message

Be aware that the start messages do not appear under unrecognized intents and unhandled messages.

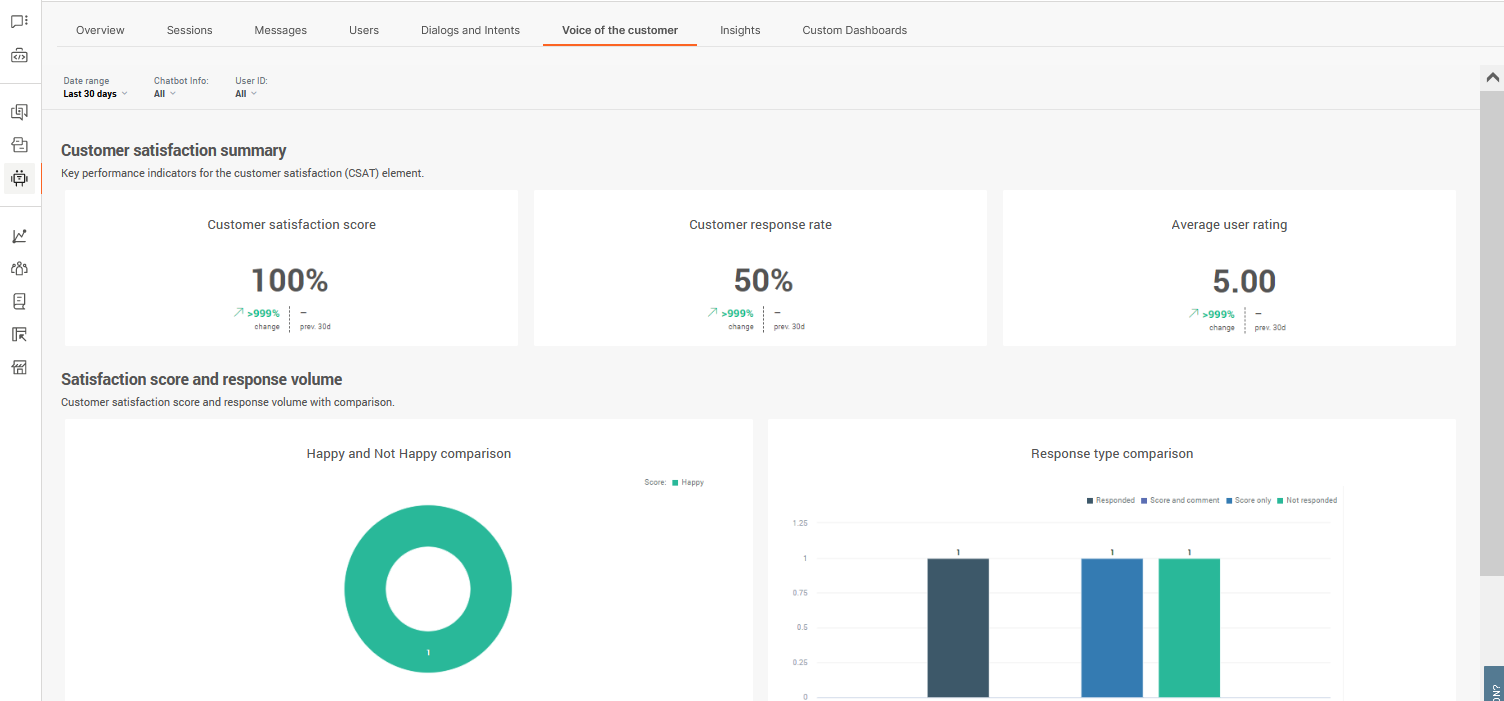

Voice of the customer

Get key performance indicators around customer satisfaction coming from the CSAT element

As well as getting information from the other predefined dashboards to find key indicators, use customer satisfaction feedback to gather data directly from the users themselves. Allowing users to engage with a CSAT survey is the quickest way to find out about the level of service your chatbot provides.

In order for Answers to gather customer satisfaction statistics, you need to use the CSAT channel element wherever possible to collect information from users. This is not done automatically, so make sure to add the element at the end of your customer journeys.

Score is the average score across all submissions, along with the rate of change over the filtered date range. Scores are usually submitted only by engaged users, because users need to reach the end of the chat to submit one.

Response rate is the percentage of users who reached the CSAT stage (the dialog that contains the CSAT element) in your customer journey and submitted a response. If your score is low and the response rate is high, this is a much stronger indicator that the chatbot could use some optimization.

Average rating is the average score across all submitted scores. Note that scoring can be set up differently for each CSAT element, so this value may not be accurate if multiple chatbots use different scoring logic.
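Response rate and average rating are both simple ratios. In this Python sketch, the number of users who reached the CSAT dialog and the 1-5 rating scale are hypothetical inputs, and rounding the average to one decimal place is an assumption about display. Because scoring logic can differ between chatbots, the sketch is only meaningful for ratings on a single scale.

```python
from typing import Optional


def response_rate(reached_csat: int, responses: int) -> float:
    """Percentage of users who reached the CSAT dialog and submitted a response."""
    return 100 * responses / reached_csat if reached_csat else 0.0


def average_rating(ratings: list[int]) -> Optional[float]:
    """Mean of submitted ratings, rounded to one decimal place (None if no ratings).

    Only meaningful when all ratings use the same scale, e.g. a single chatbot.
    """
    return round(sum(ratings) / len(ratings), 1) if ratings else None


ratings = [5, 4, 2, 5]  # hypothetical submissions on a 1-5 scale
print(response_rate(reached_csat=8, responses=len(ratings)))  # 50.0
print(average_rating(ratings))                                # 4.0
```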

Score and response volume analytics indicate whether your users are happy or not happy with the service they received from your chatbot. The happy/not happy classification is based on the score users provide, and Answers follows a standard logic when evaluating the score. Read about the CSAT element for more information. Use Response type comparison to see which users provide useful feedback by leaving comments and which do not.

Satisfaction details gives you a further look into feedback at a much more granular level. Use the metric headers to sort the display as required.

- Session ID - session universally unique identifier (UUID)

- User ID - Identifier for the end user. The format of the ID depends on the channel. Example: For WhatsApp, the user ID is the phone number

- Comment - the comment left with the score submission. This is empty if no comment was left

- Date & time - this is when feedback was submitted

- Score - happy/not happy based on the numeric score submitted

- Rating - the numeric score submitted

Use this information to improve your chatbot after it has been running and interacting with users for a while. Negative feedback and comments are quick wins for fixing gaps in the support your chatbot provides.