AI Insights

AI analytics compress large sets of conversations between your chatbot and end users into actionable insights. These insights can help you build and enhance high-quality AI chatbots.

Use these insights to do the following:

- Understand how end users interact with your chatbot

- Improve CSAT score

- Improve user conversion rate

- Improve conversational experience

- Enhance your chatbot with additional use cases based on real-life demands from end users

- Retrain your chatbot

Insight Types

You can obtain the following types of insights:

- Dialog Analysis

- Training Data Insights

- Data Insights

- Active Learning

In the AI Insights graphs, the term 'labels' refers to intents.

AI Analytics Dashboard

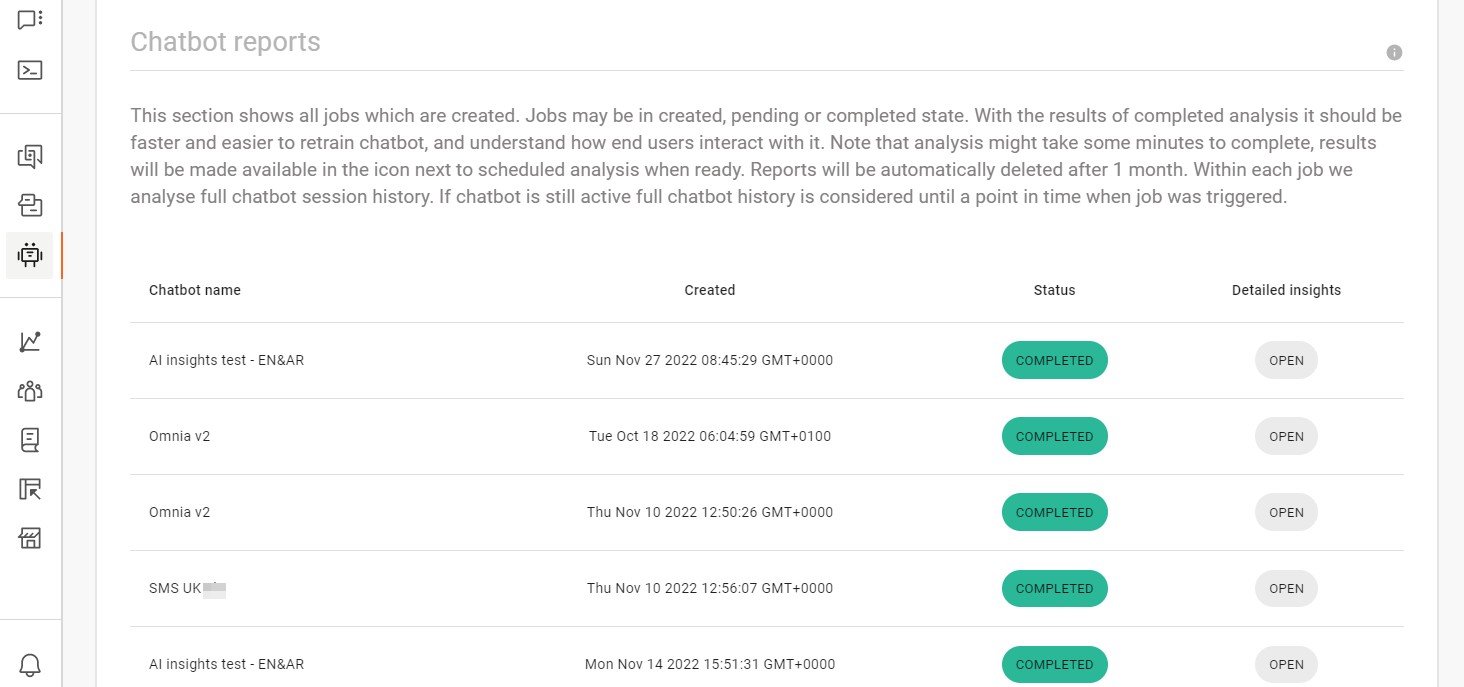

This is the default view for AI Insights. In this view, you can do the following:

- Create a new analytics job for a chatbot

- View a list of analytics jobs for your chatbots. Each analytics job is for a single chatbot

- View the following information for each analytics job:

  - Name: Name of the chatbot

  - Created at: Time when the job was created

  - Status: Status of the analytics job. The status can be one of the following:

    - Preparing: This status is displayed immediately after you click Start Analysis for an analytics job. At this stage, the data for the analysis is prepared.

    - Created: The analytics job has been created, but the analysis has not started yet. The analysis starts when resources become available.

    - Pending: The analysis has started.

    - Completed: The analysis is complete.

    - Error: An error occurred during the analysis, so the analysis was stopped.

    - No data: The chatbot does not have the data required to run the analysis for any of the insight types. If there is data for even one of the insight types and the criteria for that insight type are met, the analysis is carried out.

    When the status of an analytics job changes, the table is updated automatically.

  - Detailed Insights: For Completed analytics jobs, click Open to view the results of the analysis.

Create an Analysis Job

You need to create only one analytics job to obtain the results for all the insight types for a chatbot.

Prerequisites

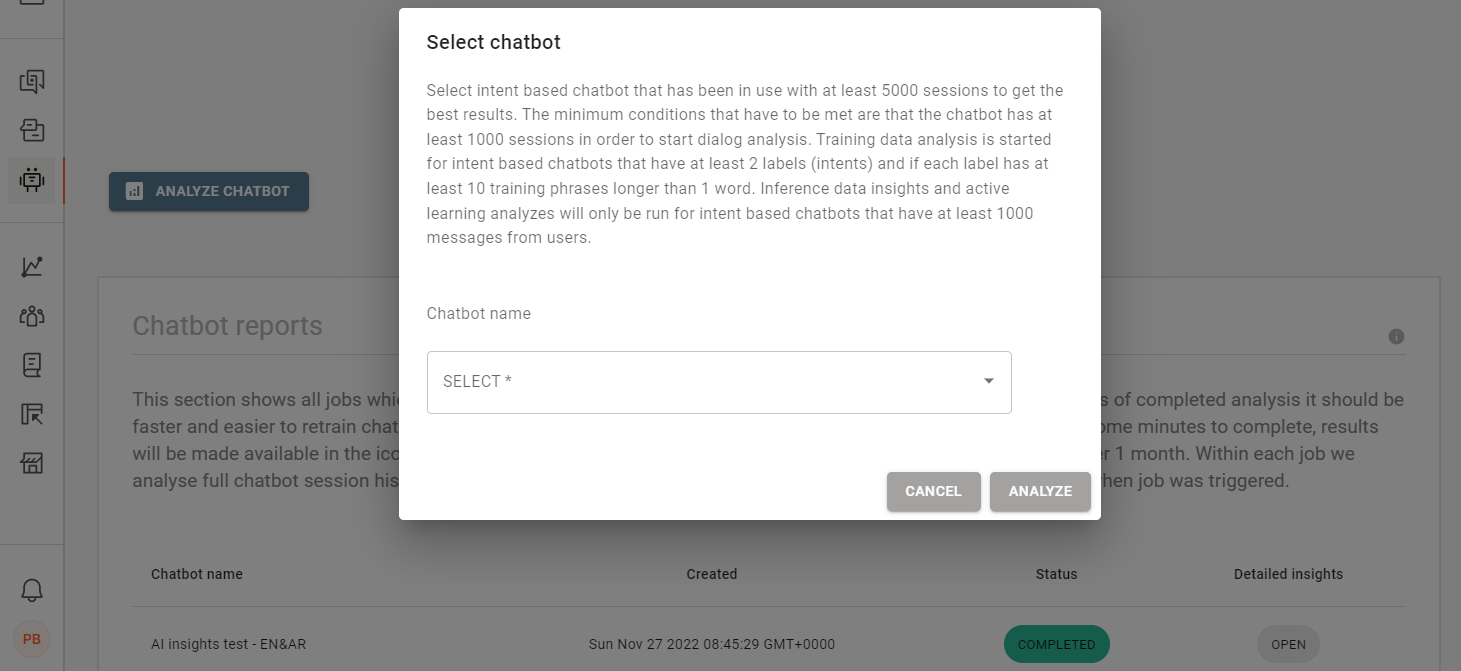

- The chatbot must be intent-based. For Keyword chatbots, only Dialog Analysis is applicable.

- You can run the analysis for both Active and Inactive chatbots. However, the chatbot must have been active for some time and must have at least one active session.

- For all analyses except Training Data Insights, the chatbot must have a session history of at least 1,000 sessions, and each session must have at least 2 dialogs. For best results, the chatbot should have at least 5,000 sessions.

- For Training Data Insights analysis, the chatbot must have at least 2 intents. Each intent must have at least 10 training phrases that are longer than 1 word.

- For Data Insights and Active Learning analyses, the chatbot must have at least 1,000 messages from end users.

- You can run analytics only for the entire session history.

- Your chatbot must use a language in which words are separated by a space. Example: English.

You cannot obtain AI insights if the chatbot uses a language in which words are not separated by spaces. Examples: Chinese, Japanese, Thai, and Lao.
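If you export session and training data for your own review, the numeric thresholds above can be expressed as a quick pre-flight check. This is a minimal sketch, not an Infobip API: the function `check_prerequisites` and its input shapes (sessions as lists of dialog names, intents as a dictionary of intent names to training phrases) are hypothetical, and it encodes only the numeric thresholds, not the intent-based, activity, or language requirements.

```python
from typing import Dict, List


def check_prerequisites(
    sessions: List[List[str]],
    intents: Dict[str, List[str]],
    end_user_message_count: int,
) -> Dict[str, bool]:
    """Report which insight types meet the documented numeric minimums.

    Hypothetical helper: each session is a list of dialog names, and
    `intents` maps intent names to their training phrases.
    """
    # Session-based analyses: 1,000+ sessions with at least 2 dialogs each.
    # Counting only qualifying sessions is an assumption of this sketch.
    qualifying = [s for s in sessions if len(s) >= 2]
    enough_sessions = len(qualifying) >= 1000

    # Training Data Insights: 2+ intents, each with 10+ phrases longer than 1 word.
    def multiword_count(phrases: List[str]) -> int:
        return sum(1 for p in phrases if len(p.split()) > 1)

    enough_training = len(intents) >= 2 and all(
        multiword_count(phrases) >= 10 for phrases in intents.values()
    )

    # Data Insights and Active Learning: 1,000+ end user messages.
    enough_messages = end_user_message_count >= 1000

    return {
        "dialog_analysis": enough_sessions,
        "training_data_insights": enough_training,
        "data_insights": enough_sessions and enough_messages,
        "active_learning": enough_sessions and enough_messages,
    }
```

A result of all `False` values corresponds roughly to the No data status described earlier.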

Process

To create a new analysis job, follow these steps:

- On the Infobip web interface, navigate to Answers > AI Insights.

- Click Create New Analysis.

- In the dialog that opens, choose the chatbot that you want to analyze.

- Click Analyze to start the analysis, or click Cancel to close the dialog without starting it.

When the status shows Completed, click Open against the analytics job to view the results.

The analysis is performed on all data from the time the chatbot was activated to the time the analysis was started. If you want the analysis to include data for a period after the analysis was started, you must run a new analysis.

The analysis might take several minutes to complete.

The results are automatically deleted after 1 month.

Dialog Analysis

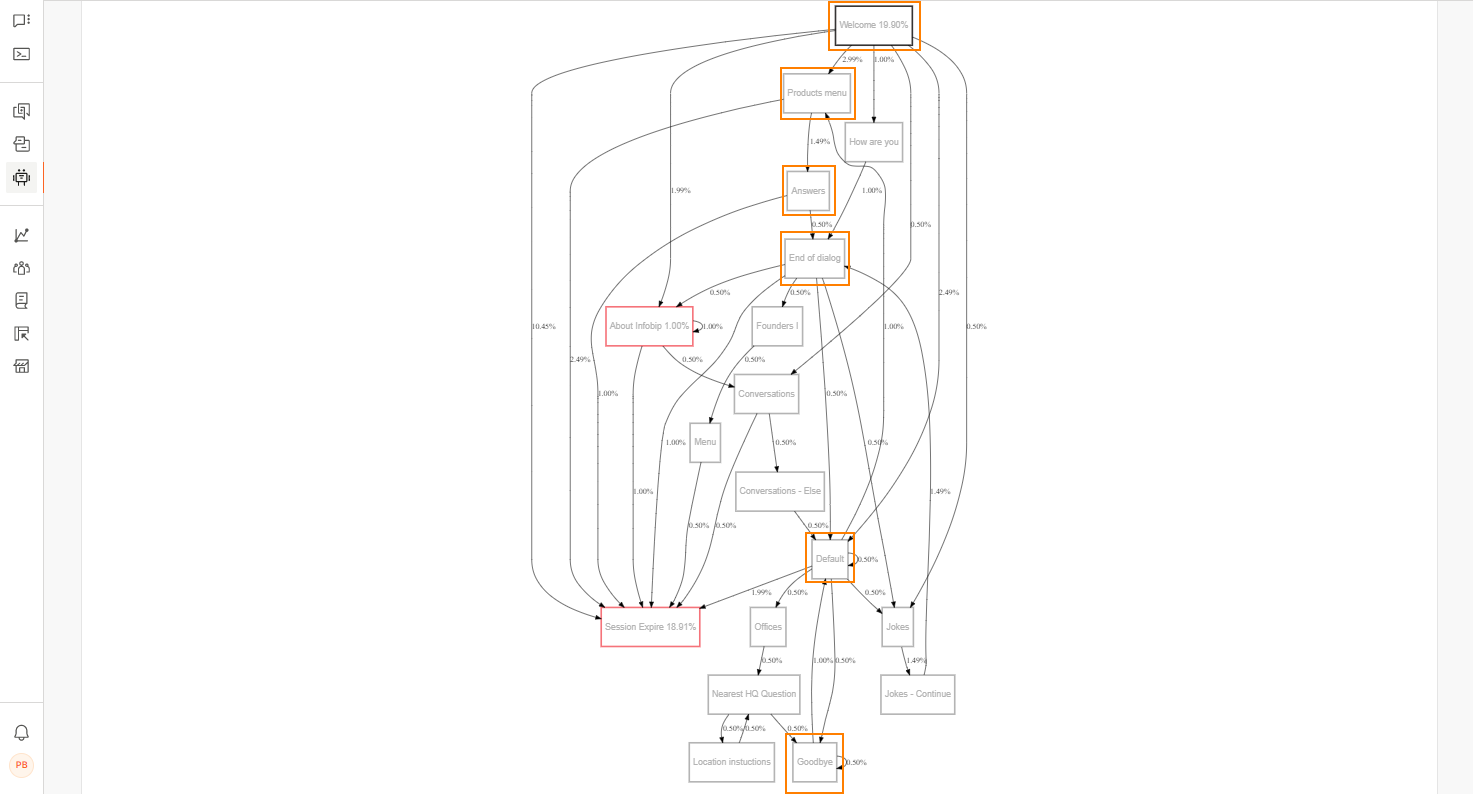

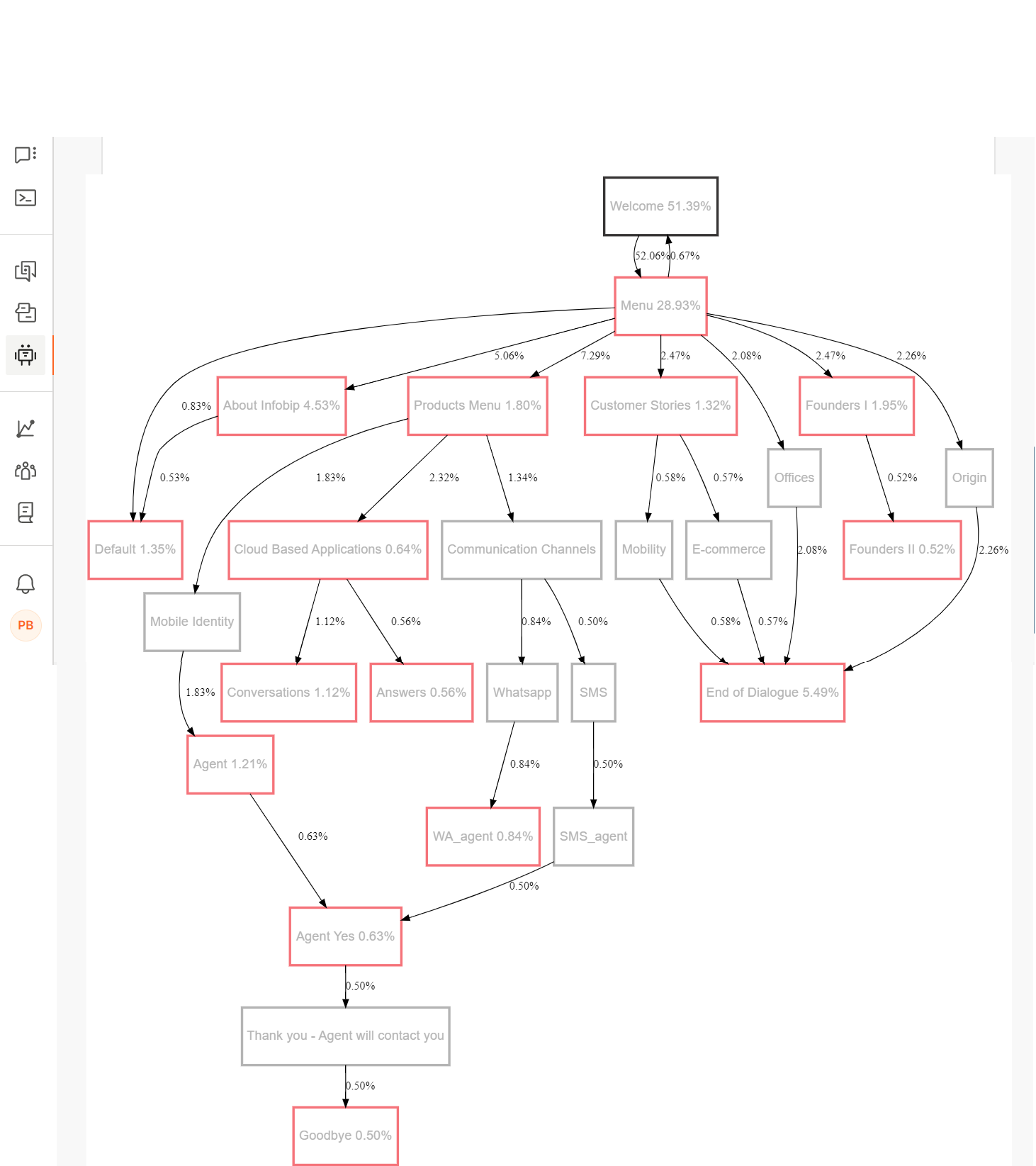

For chatbots that have large dialog trees, there may be thousands of dialog paths across sessions. A dialog path is the set of dialogs that an end user accesses during their interaction with the chatbot. It runs from the first dialog the end user accesses to the dialog at which they exit the session. Example: In the following image, one of the dialog paths is Welcome > Products menu > Answers > End of dialog > Default > Goodbye.

It is not practical to visualize or analyze every dialog path for a chatbot. Dialog Analysis evaluates all the sessions for a chatbot and identifies the 20 most used unique dialog paths for further analysis. In most cases, these 20 dialog paths represent more than 50% of all the chatbot's sessions.

Dialog Analysis helps you do the following:

- Understand how end users interact with the chatbot

- Identify where end users quit their journey

- Identify the conversion rate

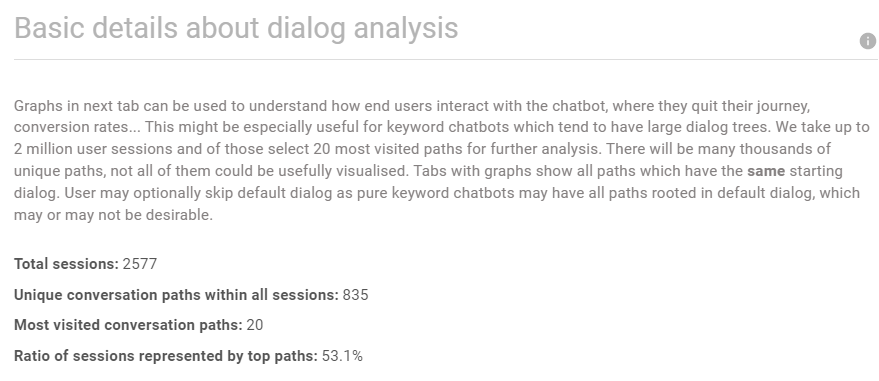

Dialog Details

In this tab, you can view the following information:

- Total sessions: The total number of sessions for which the analysis was performed. This is the total number of sessions from the time the chatbot was activated to the time the analysis was started

- Unique conversation paths within all sessions: The total number of unique dialog paths across all the sessions

- Most visited conversation paths: The number of most used unique dialog paths that are selected for detailed analysis

- Ratio of sessions represented by top paths: Percentage of sessions that are represented by the top 20 unique dialog paths.

In the following example, the analysis was performed for 2,577 sessions, which contain 835 unique dialog paths. The 20 most frequently used of these paths account for 53.1% of the sessions.
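The summary numbers in this tab come down to counting identical paths. The sketch below illustrates that idea under stated assumptions: each session is a list of dialog names in visiting order, that ordered sequence is treated as the session's dialog path, and `summarize_paths` is a hypothetical helper, not Infobip's implementation.

```python
from collections import Counter
from typing import List, Tuple


def summarize_paths(sessions: List[List[str]], top_n: int = 20) -> Tuple[int, int, float]:
    """Return (total sessions, unique paths, % of sessions covered by the top_n paths)."""
    # Each session's ordered sequence of dialogs is treated as its dialog path.
    path_counts = Counter(tuple(session) for session in sessions)
    total = len(sessions)
    # Sessions that follow one of the top_n most frequent paths.
    covered = sum(count for _, count in path_counts.most_common(top_n))
    ratio = 100.0 * covered / total if total else 0.0
    return total, len(path_counts), round(ratio, 1)


sessions = [
    ["Welcome", "Menu"],
    ["Welcome", "Menu"],
    ["Welcome", "Menu", "Offices"],
    ["Default", "Goodbye"],
]
print(summarize_paths(sessions, top_n=1))  # (4, 3, 50.0)
```

With real data, the three values correspond to Total sessions, Unique conversation paths within all sessions, and Ratio of sessions represented by top paths.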

Top 20 Most Visited Paths

You can view the following information for each of the top 20 dialog paths:

- Percentage of sessions in which end users enter the starting dialog

- Percentage of sessions in which end users move from one dialog to the next dialog

- Percentage of sessions in which end users exit the session during a dialog

All the percentages are based on the total number of sessions that were used for the analysis.

The top 20 dialog paths are grouped by starting dialog and each of these groups is displayed in a separate tab. Example: Of the top 20 dialog paths, all dialog paths that start with the Welcome dialog are grouped together in the Welcome tab.

These tabs are sorted in descending order of frequency. In the following example, Welcome is the most used starting dialog, Default is the second most used starting dialog, and so on.

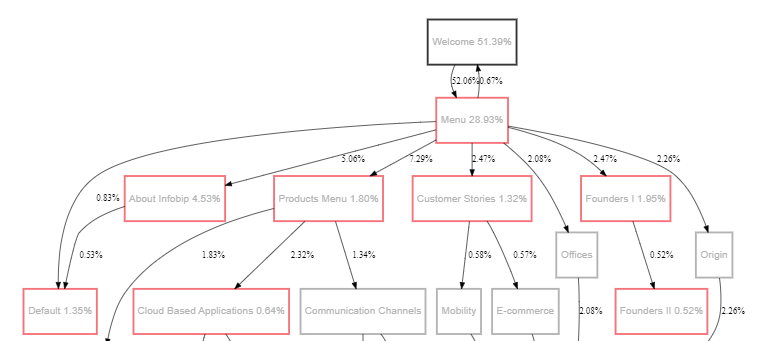

Each tab contains a graph that shows all the dialog paths that have the same starting dialog.

The nodes that have a red outline are terminal nodes and indicate dialogs at which end users exited the session. Example: In the following image, end users exited the session during the Menu, Products Menu, and Customer Stories dialogs.

You can interpret the graph as follows:

- The percentage next to the name of the starting dialog shows the percentage of sessions in which end users entered the starting dialog.

- In a terminal node, the percentage next to the name of the dialog indicates the percentage of sessions in which end users exited the chatbot session at that dialog.

- The percentage next to a node connector shows the percentage of sessions in which end users moved from one dialog to the next one.

Example: In the following image:

- 51.39% of all sessions started with the Welcome dialog.

- 52.06% of all sessions moved from the Welcome dialog to the Menu dialog.

- At the Menu dialog:

  - 0.67% of all sessions returned to the Welcome dialog.

  - 28.93% of all sessions exited the session.

  - Other sessions moved to the next dialog:

    - 0.83% of all sessions moved to the Default dialog.

    - 5.06% of all sessions moved to the About Infobip dialog.

    - 7.29% of all sessions moved to the Products Menu dialog.

    - 2.47% of all sessions moved to the Customer Stories dialog.

    - 2.08% of all sessions moved to the Offices dialog.

    - 2.47% of all sessions moved to the Founders dialog.

    - 2.26% of all sessions moved to the Origin dialog.
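Because every percentage in the graph uses the total number of analyzed sessions as its denominator, entry, transition, and exit percentages can be sketched as follows. This is a hypothetical illustration that assumes each session is a list of dialog names in visiting order. The sketch counts a transition each time it occurs, so a session that loops back (for example, Menu to Welcome to Menu) contributes more than once, and a transition percentage can exceed the entry percentage of its source dialog. Whether Answers counts repeated visits the same way is an assumption.

```python
from collections import Counter
from typing import Dict, List, Tuple


def path_graph_percentages(
    sessions: List[List[str]],
) -> Tuple[Dict[str, float], Dict[Tuple[str, str], float], Dict[str, float]]:
    """Return (entry %, transition %, exit %), all relative to the total session count."""
    total = len(sessions)
    entries: Counter = Counter()
    transitions: Counter = Counter()
    exits: Counter = Counter()
    for session in sessions:
        if not session:
            continue
        entries[session[0]] += 1  # starting dialog
        exits[session[-1]] += 1  # dialog at which the end user exited (terminal node)
        # Count every move from one dialog to the next, including returns.
        for src, dst in zip(session, session[1:]):
            transitions[(src, dst)] += 1

    def pct(counter: Counter) -> dict:
        return {key: round(100.0 * n / total, 2) for key, n in counter.items()}

    return pct(entries), pct(transitions), pct(exits)


sessions = [
    ["Welcome", "Menu"],
    ["Welcome", "Menu", "Offices"],
    ["Welcome", "Menu", "Welcome", "Menu"],
    ["Default"],
]
entry, move, exit_ = path_graph_percentages(sessions)
print(entry["Welcome"], move[("Welcome", "Menu")], exit_["Menu"])  # 75.0 100.0 50.0
```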

If a high percentage of end users exit the session at a dialog where you do not expect or want them to exit, investigate that dialog. Probable causes are that the dialog is too long, is confusing, or does not have the information that end users require.

Training Data Insights

AI chatbots use training datasets to improve conversations with end users and to resolve end users' requests. The training dataset for AI chatbots consists of intents and their associated training phrases. Training Data Insights evaluates the quality of these training datasets and identifies corrective actions.

The analysis does the following:

- Shows how well intents are performing and highlights underperforming intents.

- Identifies problematic phrases and suggests how to correct or improve intents.

Training Dataset Visualization

The Training Dataset Visualization groups training phrases into clusters.

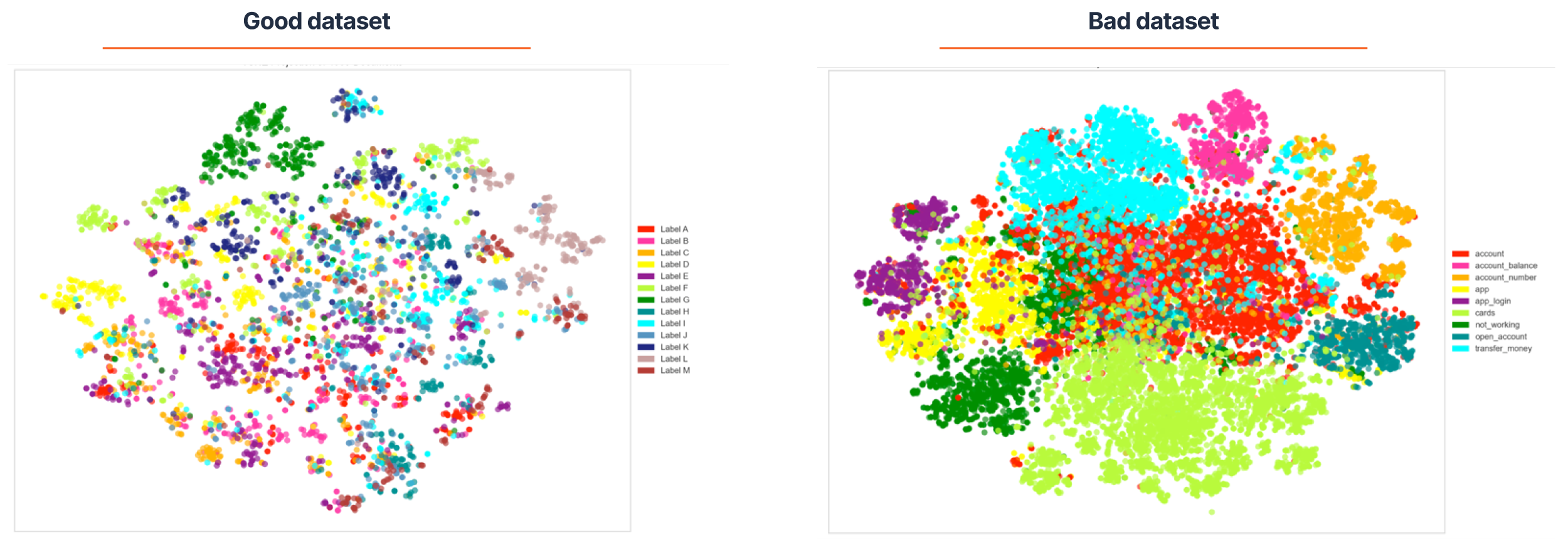

In the graph, each dot represents a training phrase, and each color represents an intent. If the training dataset is good, the dots (training phrases) are grouped into clusters of the same color.

If dots of different colors overlap, it indicates that the intents for these training phrases overlap. Example: In the following image, there is a red dot among the green dots. This indicates that the training phrase is assigned to the red intent in the chatbot, but the analysis has identified that other, similar training phrases belong to the green intent.

The fewer dots of different colors that overlap, the more likely the chatbot is to recognize the correct intent from the end user's message.
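To experiment with this idea outside Answers, you can find each training phrase's most similar neighbor and flag the phrase when that neighbor belongs to a different intent. The sketch below uses bag-of-words cosine similarity purely as an illustration. The algorithm behind the Training Dataset Visualization is not documented here and may differ. The function names and the 0.5 similarity cutoff are hypothetical. The example intents reuse the Model and Product phrases from the Best Practices section.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def _vector(text: str) -> Counter:
    """Lowercased bag-of-words vector with basic punctuation removed."""
    cleaned = text.lower().replace("?", "").replace(".", "").replace(",", "")
    return Counter(cleaned.split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find_overlapping_phrases(
    intents: Dict[str, List[str]], threshold: float = 0.5
) -> List[Tuple[str, str, str]]:
    """Return (phrase, assigned intent, nearest neighbor's intent) for suspected overlaps."""
    items = [(p, intent) for intent, phrases in intents.items() for p in phrases]
    vectors = [_vector(p) for p, _ in items]
    flagged = []
    for i, (phrase, intent) in enumerate(items):
        best_j, best_sim = None, 0.0
        for j in range(len(items)):
            if j == i:
                continue
            sim = _cosine(vectors[i], vectors[j])
            if sim > best_sim:
                best_j, best_sim = j, sim
        # Report only reasonably similar neighbors that belong to another intent.
        if best_j is not None and best_sim >= threshold and items[best_j][1] != intent:
            flagged.append((phrase, intent, items[best_j][1]))
    return flagged


intents = {
    "Model": ["What model is the car?", "Which car model is this?"],
    "Product": ["What is the model of the car?", "What products do you sell?"],
}
print(find_overlapping_phrases(intents))
```

Both "What model is the car?" and "What is the model of the car?" are flagged, because each one's closest phrase belongs to the other intent.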

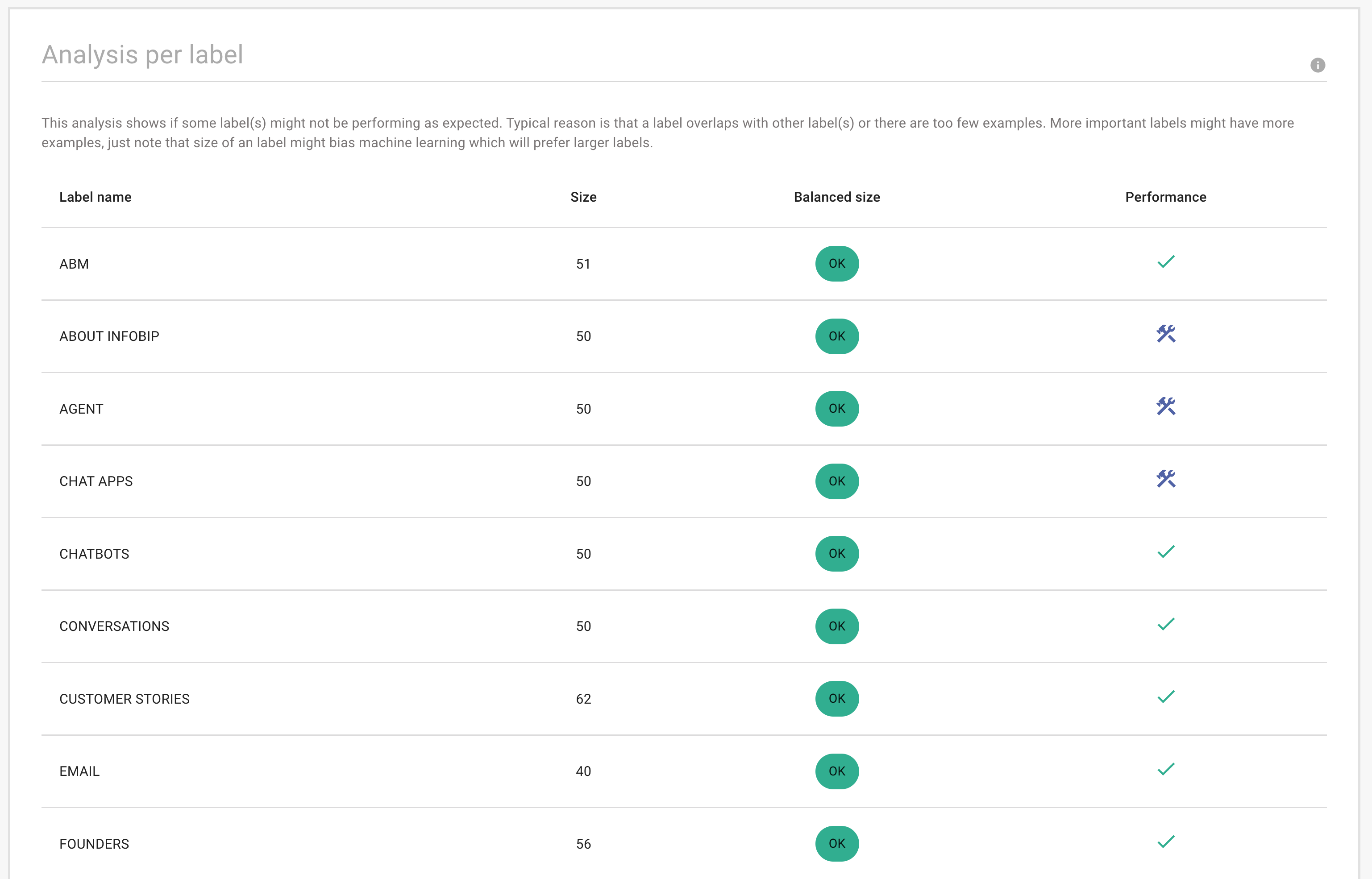

Analysis per Label

This analysis shows how well intents are performing and highlights underperforming intents for corrective action to be taken.

The table in the Analysis per Label section shows the following information:

- Label name: Name of the intent

- Size: Number of training phrases for the intent

- Balanced size: Indicates whether the number of training phrases is sufficient for machine learning

- Performance: Indicates whether the intent is performing well or needs improvement. The Tools icon indicates that the intent needs to be improved.

Probable causes for intents not performing well are:

- Intent overlaps with other intents. Check whether the training phrases are unique and whether the training phrases for this intent overlap with the training phrases for other intents.

- Insufficient training phrases. You may need to add more training phrases to your important intents. It is easier to train your chatbots if your intents have a large number of training phrases.

Data Insights

Data insights can help you improve your chatbot’s performance and end users' conversational experience. The analysis uses real-life end user data, which is optimal for retraining your chatbot.

The analysis provides the following information:

- Language distribution among all messages - Other than the language set for the chatbot, which languages are most used by end users?

- Intent distribution - What are the most frequent queries from end users? Can intents that are rarely used be removed from the chatbot?

- Ratio of messages with at least one unknown word - Is the chatbot trained well?

- Entity distribution across intents - Does the chatbot use NER attributes to their full potential, or do end users need to repeat themselves multiple times? Which entities does the chatbot need?

- Long messages - Are there any messages that the chatbot is not primarily designed to handle and that need to be investigated separately?

All the graphs are interactive.

- You can hover over a section of a graph to view the details.

- You can click a section of a graph to highlight it.

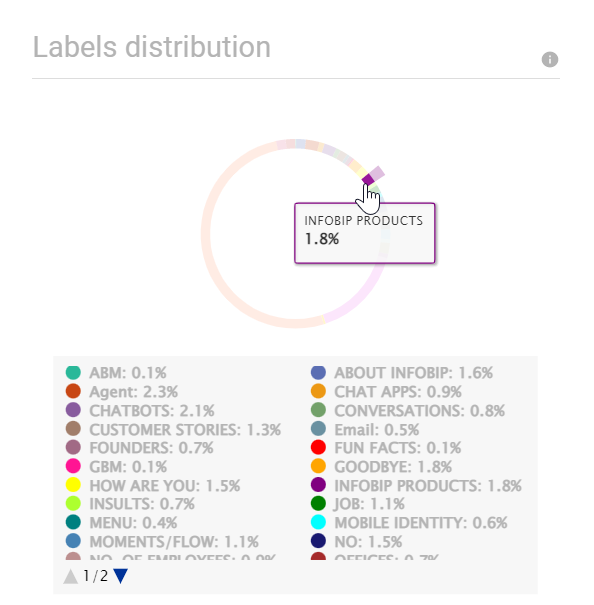

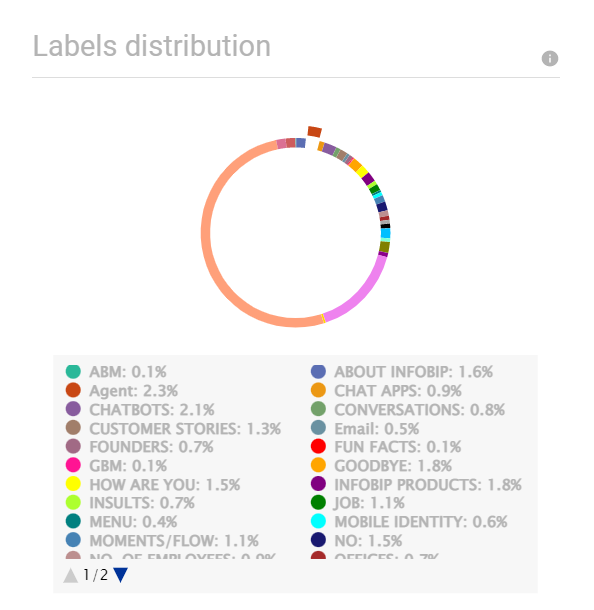

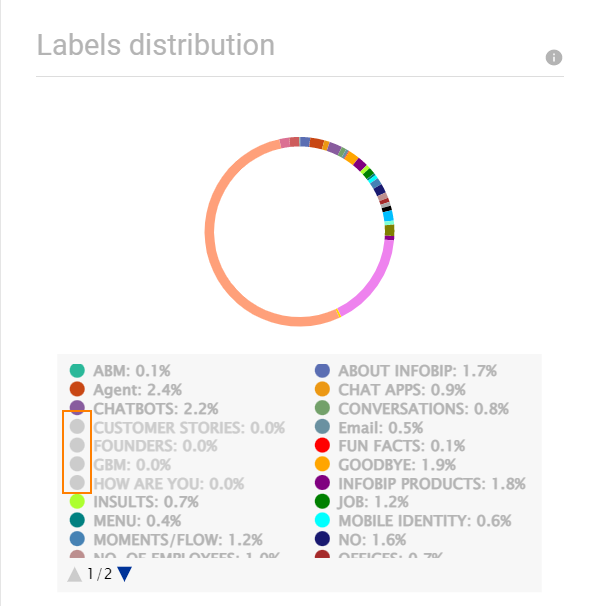

- In the legend below the graphs, you can click the dot next to an item to add the item to the graph or remove it from the graph. Example: In the following image, the outlined labels are excluded from the graph.

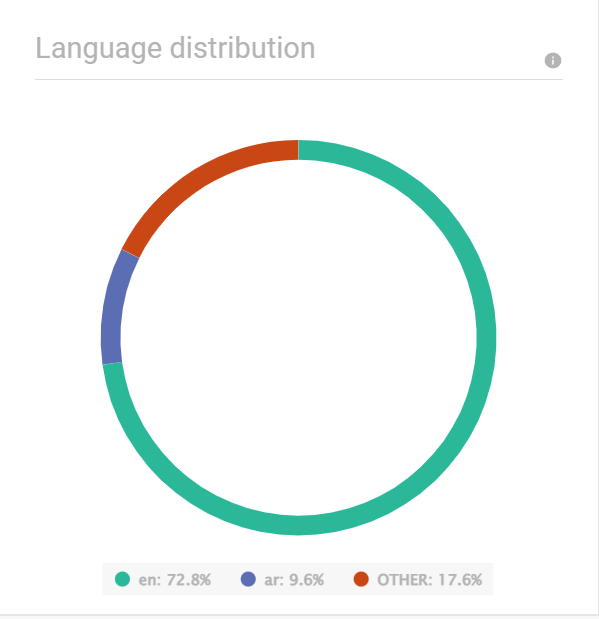

Language Distribution

The Language Distribution graph shows the distribution of languages across messages sent by end users. The analysis recognizes the following languages, listed by language code:

af, als, am, an, ar, arz, as, ast, av, az, azb, ba, bar, bcl, be, bg, bh, bn, bo, bpy, br, bs, bxr, ca, cbk, ce, ceb, ckb, co, cs, cv, cy, da, de, diq, dsb, dty, dv, el, eml, en, eo, es, et, eu, fa, fi, fr, frr, fy, ga, gd, gl, gn, gom, gu, gv, he, hi, hif, hr, hsb, ht, hu, hy, ia, id, ie, ilo, io, is, it, ja, jbo, jv, ka, kk, km, kn, ko, krc, ku, kv, kw, ky, la, lb, lez, li, lmo, lo, lrc, lt, lv, mai, mg, mhr, min, mk, ml, mn, mr, mrj, ms, mt, mwl, my, myv, mzn, nah, nap, nds, ne, new, nl, nn, no, oc, or, os, pa, pam, pfl, pl, pms, pnb, ps, pt, qu, rm, ro, ru, rue, sa, sah, sc, scn, sco, sd, sh, si, sk, sl, so, sq, sr, su, sv, sw, ta, te, tg, th, tk, tl, tr, tt, tyv, ug, uk, ur, uz, vec, vep, vi, vls, vo, wa, war, wuu, xal, xmf, yi, yo, yue, zh

If the chatbot language is different from the most represented language, you can modify the chatbot to improve its performance.

In the graph, all languages that account for 5% or less of the messages are grouped together as Other.
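The Other grouping rule can be expressed in a few lines. This minimal sketch assumes you already have one detected language code per end user message. Language detection itself is out of scope, and `group_languages` is a hypothetical helper.

```python
from collections import Counter
from typing import Dict, List


def group_languages(message_languages: List[str], threshold: float = 5.0) -> Dict[str, float]:
    """Return each language's share (%), folding languages at or below threshold into 'Other'."""
    total = len(message_languages)
    shares: Dict[str, float] = {}
    other = 0.0
    for code, count in Counter(message_languages).items():
        pct = 100.0 * count / total
        if pct <= threshold:
            other += pct  # 5% or less: grouped as Other
        else:
            shares[code] = round(pct, 1)
    if other:
        shares["Other"] = round(other, 1)
    return shares


langs = ["en"] * 90 + ["de"] * 6 + ["fr"] * 3 + ["it"] * 1
print(group_languages(langs))  # {'en': 90.0, 'de': 6.0, 'Other': 4.0}
```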

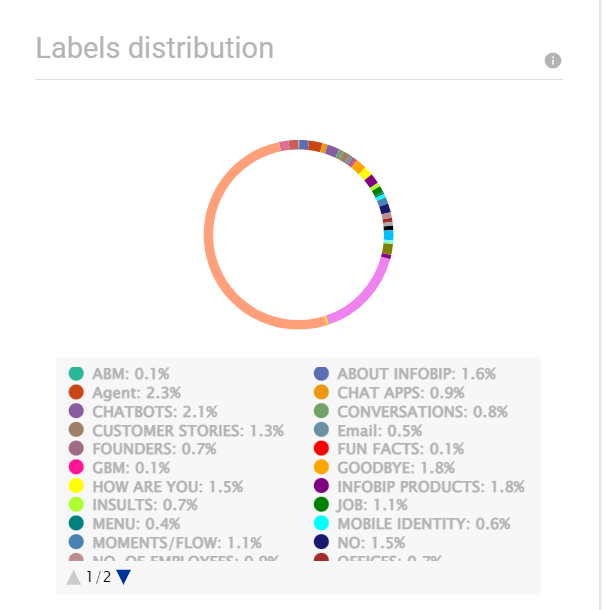

Labels Distribution

The Labels Distribution graph shows the distribution of intents across messages sent by end users. Example: In the following graph, 0.2% of messages from end users are for the ABM intent.

The 'Unknown' label denotes messages for which the intent could not be identified.

Labels Distribution analysis is useful only for large, well-designed chatbots. It does not help with small or poorly designed chatbots.

Unknown Words

Use the Unknown Words graph to identify how relevant the training dataset is to end user messages.

When an end user enters a dialog, the chatbot tries to identify the intent of the end user's message. The Unknown Words analysis compares the words in the end user's message with the training dataset for this intent and identifies words that are not part of the training dataset. If many messages contain unknown words, check the Active Learning section for more actionable insights. Example: The Concepts section may suggest new concepts, intents, topics, or training phrases. You can also check the Unknown Labels section.

The graph shows the percentage of messages that contain at least one unknown word. Ideally, this percentage should be less than 20%. When you use the unknown words to retrain your chatbot, this percentage decreases.
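The percentage in this graph can be approximated by building a vocabulary for each intent from its training phrases and counting messages that contain at least one word outside the vocabulary of their recognized intent. This is a hedged sketch: the tokenization (lowercasing and stripping punctuation) is an assumption, and the data layout and function names are hypothetical.

```python
import string
from typing import Dict, List, Set, Tuple


def _words(text: str) -> List[str]:
    """Lowercase and strip surrounding punctuation from each token."""
    tokens = (w.strip(string.punctuation) for w in text.lower().split())
    return [t for t in tokens if t]


def unknown_word_ratio(
    messages: List[Tuple[str, str]], training: Dict[str, List[str]]
) -> float:
    """Percentage of (message, recognized intent) pairs with at least one unknown word."""
    vocab: Dict[str, Set[str]] = {
        intent: {w for phrase in phrases for w in _words(phrase)}
        for intent, phrases in training.items()
    }
    if not messages:
        return 0.0
    with_unknown = sum(
        1
        for text, intent in messages
        if any(w not in vocab.get(intent, set()) for w in _words(text))
    )
    return 100.0 * with_unknown / len(messages)


training = {"Balance": ["How much money is in my account?", "Show me my balance"]}
messages = [
    ("how much money is in my account", "Balance"),
    ("show me my savings balance", "Balance"),  # 'savings' is unknown
]
ratio = unknown_word_ratio(messages, training)
print(ratio, "OK" if ratio < 20 else "consider retraining")  # 50.0 consider retraining
```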

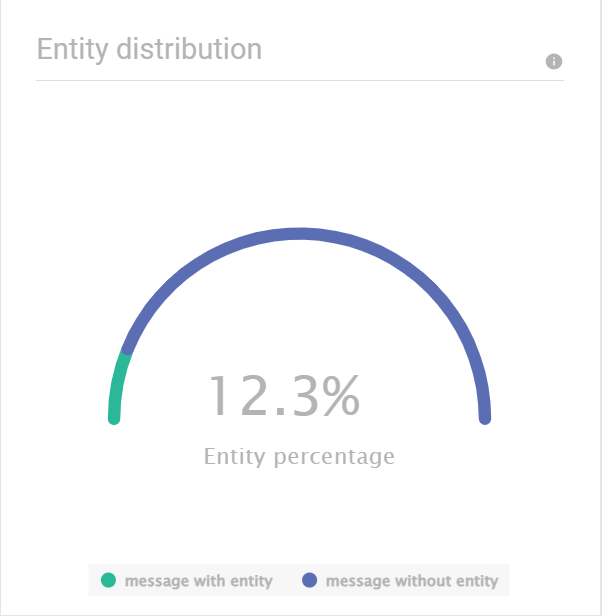

Entity Distribution

The Entity Distribution graph shows the percentage of end user messages that contain entities. Example: In the following graph, 17.5% of end user messages contain entities.

If many end user messages contain entities but you have not enabled the entity feature, consider enabling the feature to improve end user experience.

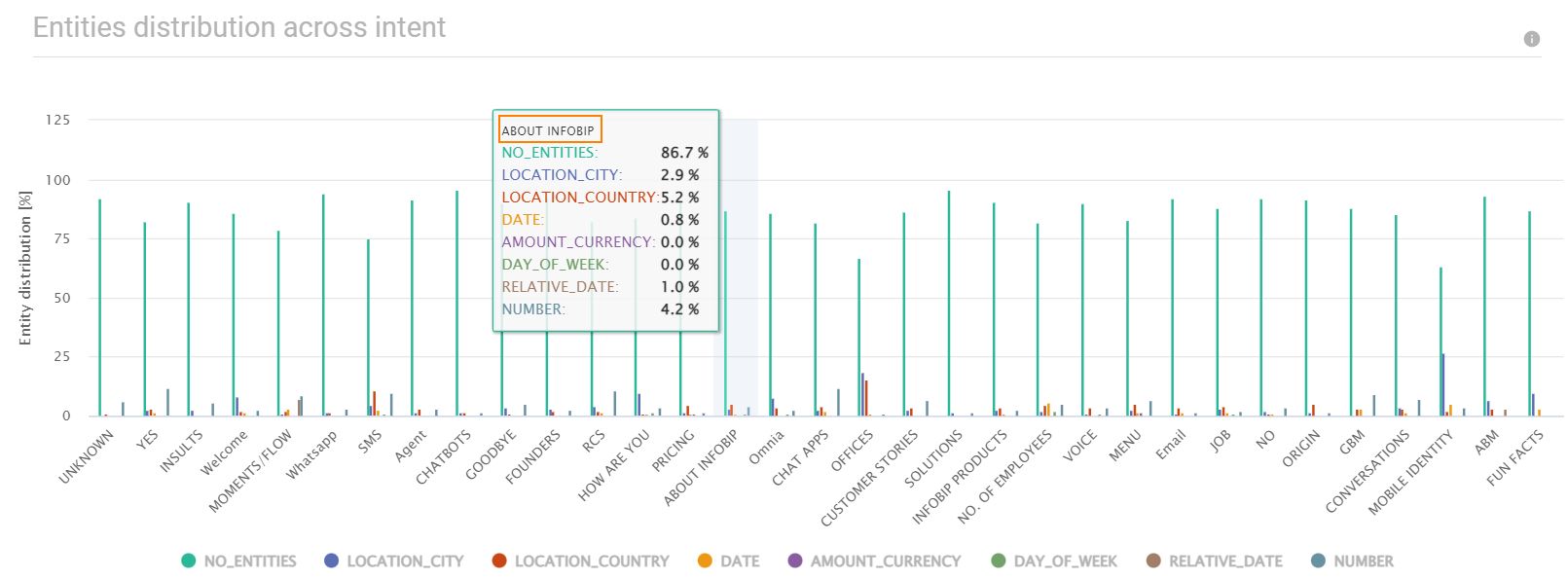

Entity Distribution across Intent

This graph shows the distribution of entities across intents.

The analysis includes only standard entities. It does not include custom entities.

Use this graph to identify the occurrence of entities in end user messages. Example: In the following image, 5.2% of the end user messages for the About_Infobip intent contain the Location_Country entity. This shows that in these messages, the end users have mentioned the country. Similarly, the Location_Country entity is present in messages for other intents. If you add this entity to your chatbot, the chatbot can extract the country from the end user's message and does not have to ask the end user for the country.
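Each percentage in this graph is the share of an intent's messages that contain a given entity type. The sketch below assumes you already have, for each message, the recognized intent and the set of detected entity types. Entity recognition is not shown, and the data layout and function name are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple


def entity_share_by_intent(
    messages: Iterable[Tuple[str, Set[str]]],
) -> Dict[str, Dict[str, float]]:
    """Map intent -> entity type -> % of that intent's messages containing the entity."""
    per_intent_total: Counter = Counter()
    per_intent_entity: Dict[str, Counter] = defaultdict(Counter)
    for intent, entities in messages:
        per_intent_total[intent] += 1
        for entity in entities:  # a set, so each entity type counts once per message
            per_intent_entity[intent][entity] += 1
    return {
        intent: {
            entity: round(100.0 * n / per_intent_total[intent], 1)
            for entity, n in per_intent_entity[intent].items()
        }
        for intent in per_intent_total
    }


messages = [
    ("About_Infobip", {"Location_Country"}),
    ("About_Infobip", set()),
    ("About_Infobip", set()),
    ("About_Infobip", set()),
    ("Offices", {"Location_Country", "Location_City"}),
]
print(entity_share_by_intent(messages)["About_Infobip"])  # {'Location_Country': 25.0}
```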

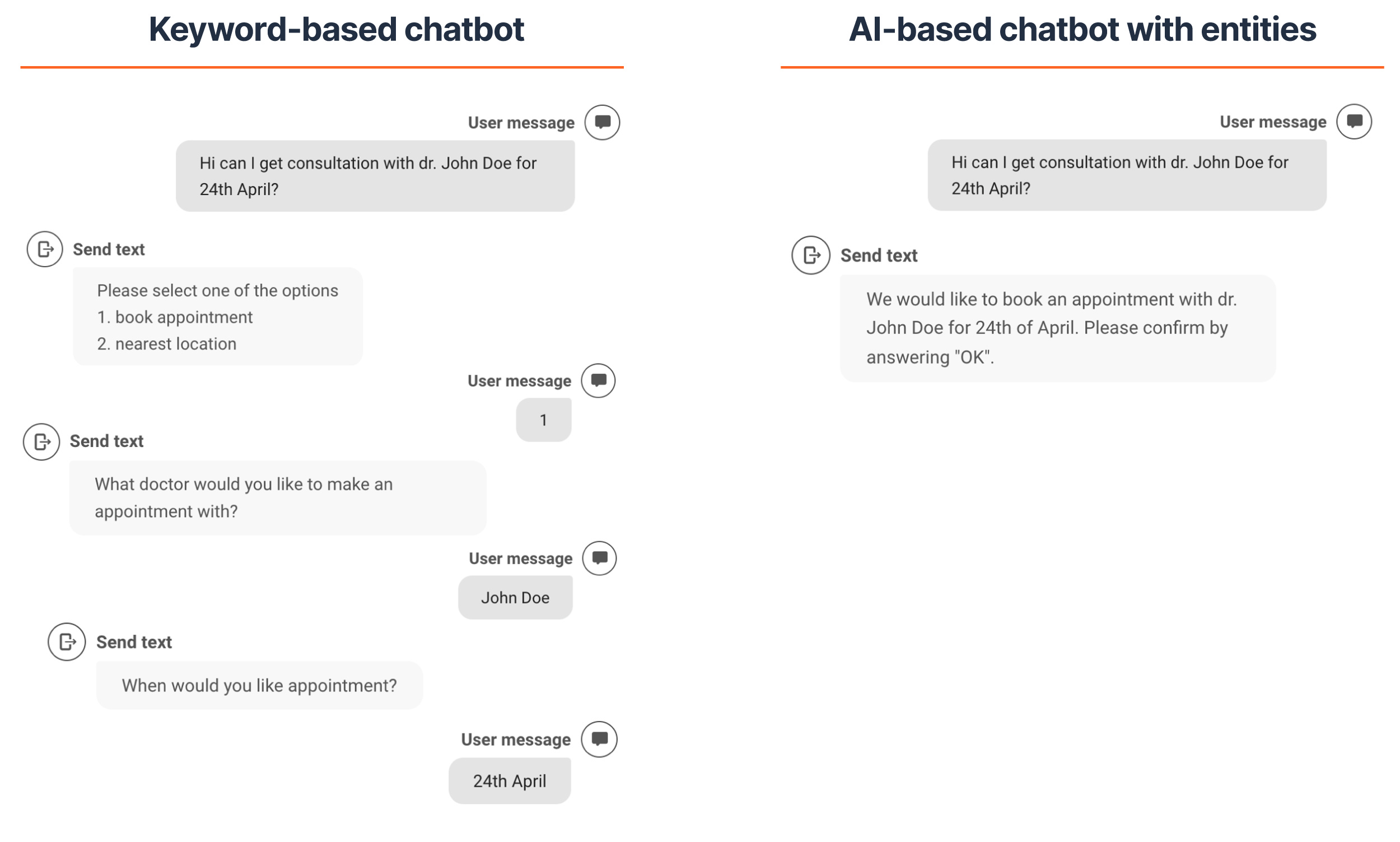

The following image compares a Keyword-based chatbot with an AI-based chatbot that has entities enabled. In both conversations, the end user wants to book an appointment.

The Keyword chatbot works based on the keywords assigned to it. If the chatbot does not find these keywords in the end user's message, the chatbot uses the default flow. This leads to repetition and poor user experience. In this example, the end user has provided the required information to book an appointment. But because the message does not contain the configured keywords, the chatbot asks the end user for the information.

The AI chatbot recognizes the following entities in the end user's message:

- Person: Dr. John Doe

- Date: 24th April

So, the AI chatbot does not need to ask the end user for the information. The end user can get a faster response and has a better user experience.

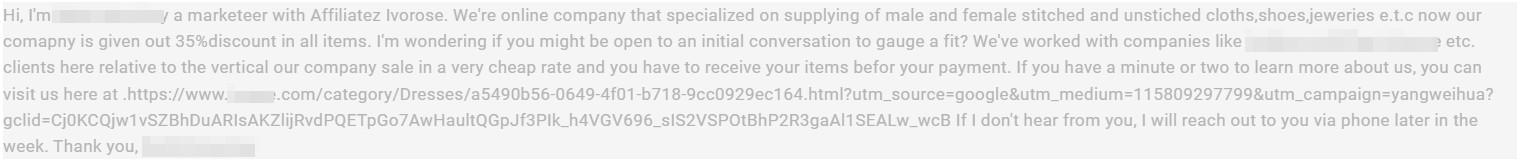

Long Messages

The Long Messages analysis extracts all the long sentences from the conversation between the chatbot and the end user. These messages could be marketing campaigns or other requests that the chatbot is not designed to handle. Business users can evaluate these messages and take relevant action. This analysis is not intended for the chatbot designer but provides an option for business users to improve customer satisfaction.

You can either view the long messages in the Answers web interface or click Download to download the file in .csv format.

Active Learning

Use Active Learning to identify data points that will increase the accuracy of the chatbot. The analysis uses real-life end user data, which is optimal for retraining your chatbot.

Use the analysis to do the following:

- Identify the conversation topic from end user messages

- Discover topics that are not handled through intents

- Extract useful messages that you can use to retrain the chatbot

Active learning analysis is done by language. The analysis is performed for each language that is used in 30% or more of the end user messages. The results for each language are displayed in a separate tab.

The languages are displayed at the top of the page. You can view the details about the language distribution in the Data Insights tab.
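The language selection rule is a simple filter over message counts. This is a hypothetical helper that assumes one detected language code per end user message.

```python
from collections import Counter
from typing import List


def active_learning_languages(message_languages: List[str], min_share: float = 30.0) -> List[str]:
    """Languages used in at least min_share % of end user messages, most used first."""
    total = len(message_languages)
    if total == 0:
        return []
    return [
        code
        for code, count in Counter(message_languages).most_common()
        if 100.0 * count / total >= min_share
    ]


print(active_learning_languages(["en"] * 6 + ["hr"] * 3 + ["de"] * 1))  # ['en', 'hr']
```

Because each language needs at least 30% of the messages, no more than three languages can qualify, so the analysis shows at most three language tabs.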

Active learning is classified as follows:

- Concepts: Detects potential topics from end user messages. You can add these concepts as new intents or use the concepts as training phrases to improve the accuracy of the chatbot.

- Unknown labels: Messages that consist mostly of words that are not seen in the training data. You can review these words and decide whether to add them as new training phrases to existing intents.

- Uncertain decision: Instances where the chatbot is unable to determine the intent of the message. To improve performance, add relevant messages to the training dataset.

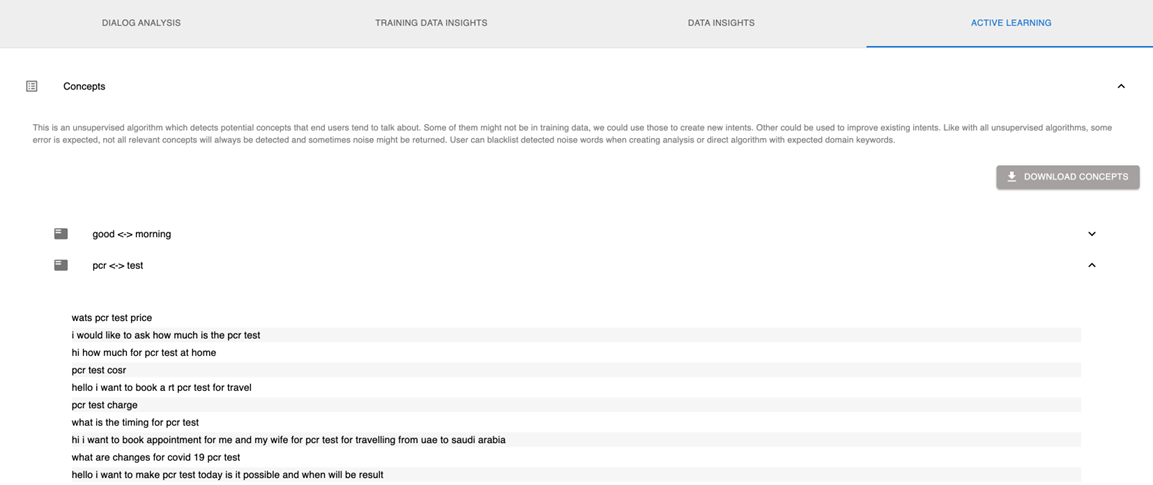

Concepts

This analysis detects pairs of words that represent topics (concepts) in end user messages. Evaluate all messages that are related to the concept and identify ones that are relevant to the chatbot. If the concept is applicable to an existing intent, add the relevant messages to the training dataset of the intent. If the concept is unique, create a new intent, add the relevant messages as training phrases to the new intent, and incorporate the intent into the chatbot.

The following example shows that the analysis identified a new concept, PCR ↔ TEST, which was not in the training dataset.

Because this analysis uses an unsupervised algorithm, the results may not be accurate. The analysis may not detect all the relevant concepts and may also detect irrelevant concepts.

To download the analysis results in .csv format, click Download concepts.
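To illustrate how word-pair concepts such as PCR ↔ TEST can surface from unlabeled messages, the following sketch counts pairs of words that co-occur in end user messages and keeps the pairs that contain at least one word missing from the training data. It is a deliberately naive co-occurrence count, not the unsupervised algorithm that Answers uses. The stop-word list, the `min_count` cutoff, and the function name are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import List, Set, Tuple

# Hypothetical, deliberately tiny stop-word list for illustration.
STOP_WORDS = {"i", "a", "the", "to", "do", "you", "is", "my", "for", "can", "get"}


def candidate_concepts(
    messages: List[str], training_vocab: Set[str], min_count: int = 2
) -> List[Tuple[Tuple[str, str], int]]:
    """Return frequent word pairs that include at least one word unseen in training."""
    pair_counts: Counter = Counter()
    for message in messages:
        words = sorted({w.strip("?.!,").lower() for w in message.split()} - STOP_WORDS - {""})
        for pair in combinations(words, 2):  # each unordered pair once per message
            if pair[0] not in training_vocab or pair[1] not in training_vocab:
                pair_counts[pair] += 1
    return [(pair, n) for pair, n in pair_counts.most_common() if n >= min_count]


messages = [
    "Where can I get a PCR test?",
    "How much is a PCR test?",
    "I need a PCR test for travel",
]
vocab = {"where", "how", "much", "need", "travel", "test"}
print(candidate_concepts(messages, vocab))  # [(('pcr', 'test'), 3)]
```

As with the real analysis, a naive count like this also produces noise, so review each candidate pair before you turn it into an intent or training phrases.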

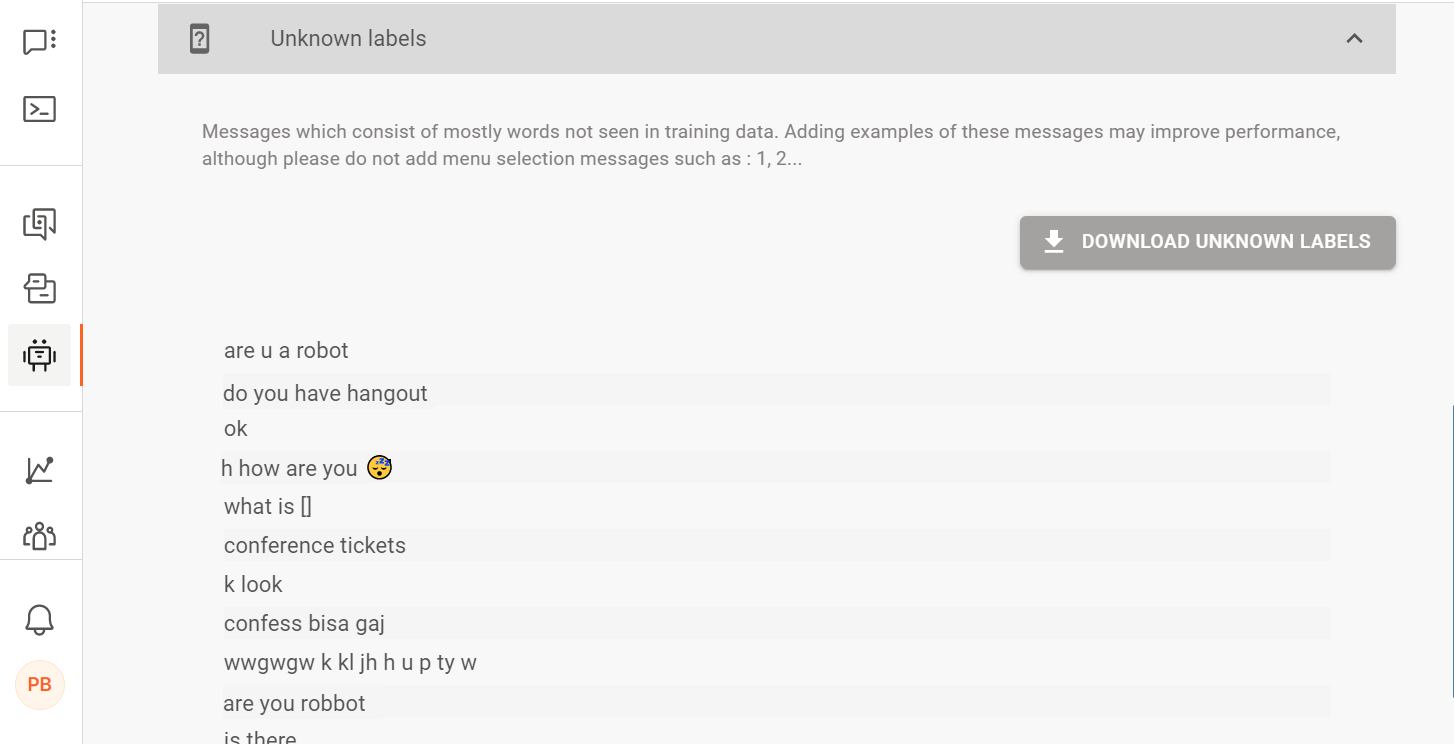

Unknown Labels

This analysis identifies end user messages whose intent could not be identified because most of the words in these messages are not present in the training dataset of any intent. Review these messages and identify the ones that are relevant to the chatbot. If the messages are applicable to an existing intent, add these messages to the training dataset of the intent. Otherwise, create a new intent, add the relevant messages as training phrases to the new intent, and incorporate the intent into the chatbot.

To download the results in .csv format, click Download unknown labels.
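The "mostly unknown words" criterion can be illustrated with a threshold on the share of words that do not appear in the training data. This is a sketch under assumptions: the 50% cutoff, the tokenization, and the function name are hypothetical choices, not documented values.

```python
from typing import List, Set


def unknown_label_messages(
    messages: List[str], training_vocab: Set[str], min_unknown_share: float = 0.5
) -> List[str]:
    """Return messages in which more than min_unknown_share of the words are unseen in training."""
    flagged = []
    for message in messages:
        words = [w.strip("?.!,").lower() for w in message.split()]
        words = [w for w in words if w]
        if not words:
            continue
        unknown = sum(1 for w in words if w not in training_vocab)
        if unknown / len(words) > min_unknown_share:
            flagged.append(message)
    return flagged


vocab = {"how", "much", "money", "is", "in", "my", "account"}
msgs = [
    "How much money is in my account?",  # all words known
    "Do you sell travel insurance?",  # all words unknown
    "How much is travel insurance?",  # 2 of 5 words unknown
]
print(unknown_label_messages(msgs, vocab))  # ['Do you sell travel insurance?']
```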

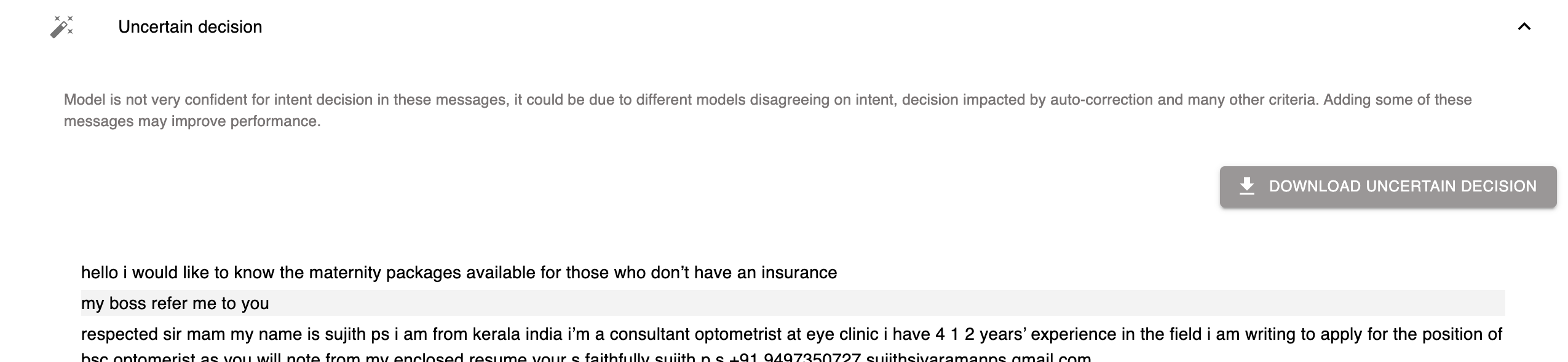

Uncertain Decision

This analysis identifies end user messages for which the intent could not be identified with certainty. Some of the causes are as follows:

- The analysis uses multiple machine-learning models and identifies messages for which these models do not agree on the same intent. Probable causes are a low-quality training dataset or intents with overlapping training phrases. Add these messages as training phrases to the relevant intent.

- The intent that was recognized for the message after autocorrection is different from the intent recognized before autocorrection. Evaluate these messages and add them as training phrases to the correct intent.

To download the results in .csv format, click Download uncertain decision.
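The first case, disagreement between several models, resembles the query-by-committee idea from active learning: a message is uncertain when the committee's predictions differ. The sketch below shows the idea with hypothetical keyword-based stand-ins for trained classifiers. The models that Answers actually uses are not documented here. The autocorrection case could be checked the same way by comparing the predictions for the original and autocorrected message.

```python
from typing import Callable, List

# A "model" here is any callable that maps a message to an intent name.
Model = Callable[[str], str]


def uncertain_messages(messages: List[str], models: List[Model]) -> List[str]:
    """Return messages for which the models do not all predict the same intent."""
    return [m for m in messages if len({model(m) for model in models}) > 1]


# Hypothetical stand-ins for trained classifiers.
def model_a(text: str) -> str:
    return "Book_Appointment" if "book" in text.lower() else "Cancel_Appointment"


def model_b(text: str) -> str:
    return "Cancel_Appointment" if "cancel" in text.lower() else "Book_Appointment"


messages = ["Please book an appointment", "Cancel my appointment", "Can I move my appointment?"]
print(uncertain_messages(messages, [model_a, model_b]))  # ['Can I move my appointment?']
```

The flagged message is a rescheduling request that neither stand-in model was built for, which is the kind of message you would add as a training phrase to the correct intent.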

Best Practices

The performance of a chatbot depends on the quality as well as quantity of the training dataset. It is important to have a good training dataset so that your chatbot can correctly identify the intent of an end user's message and respond accordingly.

This section explains how to create a good training dataset for your intents.

Create a Good Dataset

You can create a good dataset in the following ways:

- Use the messages sent by end users to your chatbot.

- Buy a dataset from our partners who specialize in creating datasets for AI chatbots. For more information, contact your Infobip Account Manager.

- Use the Active Learning feature in Answers to collect new and relevant data.

Avoid Similar Intents

Make sure that you do not have multiple intents with the same purpose.

Example: There are ten car models: CarA, CarB, CarC, and so on. The intent for each model is as follows:

| Intent | Training Phrase | Entity |
|---|---|---|
| Buy_CarA | Which CarA models do you have in stock? Do you have a leasing option for CarA? | CarA |
| Buy_CarB | Which CarB models do you have in stock? Do you have a leasing option for CarB? | CarB |
| Buy_CarC | Which CarC models do you have in stock? Do you have a leasing option for CarC? | CarC |
| ... | ... | ... |

In this example, the purpose of all the intents is the same - buying a specific model of a car.

The training phrases for these intents are also the same, apart from the car model. If such overlap occurs across all the training phrases of the intents, the chatbot may not be able to handle the situation correctly. Example: If the end user message does not contain the car model, the chatbot may recognize any one of the intents (Buy_CarA, Buy_CarB, and so on) at random.

To avoid this issue, you can do the following:

- Merge the intents to create a single intent. Example: Buy_Car.

- Create a custom data type for the entity and add the values. Example: model{CarA, CarB, CarC, ...}.

- Create a NER attribute. Example: Car attribute of type model.

- Assign the NER attribute to the intent. Example: Assign Car to Buy_Car.

- Branch the chatbot based on the retrieved entity value. Example: CarA.

Avoid Similar or Identical Training Phrases in Discrete Intents

If your intents have different purposes, make sure that you do not have similar or identical training phrases in these intents.

In the following example, the two intents, Model and Product, have different purposes. But the training phrases are similar. So, the chatbot may not be able to identify the correct intent for the end user's message.

| Intent | Training Phrase |
|---|---|
| Model | What model is the car? |
| Product | What is the model of the car? |

Use Variations in Training Phrases

In an intent, make sure that the training phrases have variations.

Example: For the Exchange_Currency intent, the training phrases are as follows:

- I would like to change from EUR to USD.

- I would like to change from EUR to GBP.

- I would like to change from USD to EUR.

- I would like to change from USD to GBP.

- I would like to change from GBP to EUR.

- I would like to change from GBP to USD.

If the end user sends a different variation of the message, the chatbot may not be able to identify the intent.

Example: The end user's message is "I want to convert US dollars to British pounds".

This message is a variation of the training phrase 'I would like to change from USD to GBP.' The only words it shares with the training dataset are 'I' and 'to', which the chatbot ignores, so there is no meaningful overlap between the end user's message and the training dataset. As a result, the chatbot is unable to assign the Exchange_Currency intent to the message.
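You can check this kind of overlap yourself by comparing word sets after removing ignored words. This is a minimal sketch: the ignore list contains only the two words named above and is hypothetical, because the full list of words that the chatbot ignores is not given here.

```python
import string
from typing import List, Set

IGNORED_WORDS = {"i", "to"}  # hypothetical: only the two words named above


def content_words(text: str) -> Set[str]:
    """Lowercase, strip punctuation, and drop ignored words."""
    words = {w.strip(string.punctuation).lower() for w in text.split()}
    return words - IGNORED_WORDS - {""}


def overlap_with_training(message: str, training_phrases: List[str]) -> Set[str]:
    """Return the content words that the message shares with the training phrases."""
    vocab = set().union(*(content_words(p) for p in training_phrases))
    return content_words(message) & vocab


training = [
    "I would like to change from EUR to USD.",
    "I would like to change from EUR to GBP.",
    "I would like to change from USD to EUR.",
    "I would like to change from USD to GBP.",
    "I would like to change from GBP to EUR.",
    "I would like to change from GBP to USD.",
]
print(overlap_with_training("I want to convert US dollars to British pounds", training))  # set()
print(sorted(overlap_with_training("I would like to convert USD to GBP", training)))
# ['gbp', 'like', 'usd', 'would']
```

An empty result means the message has nothing in common with the intent's training data, which is a signal to add phrasings such as this one as training phrases.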

Use Sufficient Number of Training Phrases

If an intent has very few training phrases, the chatbot will not have enough data to learn how to correctly identify the intent. The larger the number of training phrases for an intent, the better the chatbot can identify this intent when an end user sends a relevant message.

To complete validation, you need to add a minimum of 10 training phrases to an intent. This number includes only training phrases and not keywords. Infobip recommends that you add at least 100 training phrases. For the best performance, add at least 400 training phrases.
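These thresholds lend themselves to a quick audit of an exported list of intents. The sketch assumes a hypothetical dictionary that maps intent names to training phrases, with keywords excluded as noted above. Only the values 10, 100, and 400 come from this page; the labels and function names are illustrative.

```python
from typing import Dict, List


def rate_intent_size(phrase_count: int) -> str:
    """Classify an intent by its number of training phrases."""
    if phrase_count < 10:
        return "fails validation"
    if phrase_count < 100:
        return "below recommended"
    if phrase_count < 400:
        return "recommended"
    return "best performance"


def audit_intents(intents: Dict[str, List[str]]) -> Dict[str, str]:
    """Rate every intent in a hypothetical {intent name: training phrases} export."""
    return {name: rate_intent_size(len(phrases)) for name, phrases in intents.items()}


intents = {
    "Welcome": [f"greeting {i}" for i in range(12)],
    "Mortgage": [f"mortgage question {i}" for i in range(150)],
}
print(audit_intents(intents))  # {'Welcome': 'below recommended', 'Mortgage': 'recommended'}
```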

Create More Training Phrases for Frequently Occurring Words

The number of training phrases that contain a word should reflect how often end users use that word. If a word is used frequently in end user messages, add more training phrases that contain it. Example: If the word 'money' is used in 70% of end user messages and the word 'current' is used in only 2% of the messages, create more training phrases that contain the word 'money', such as the following:

- How much money is there in my account?

- Show me my money

- How much money do I have?
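To decide which words deserve more training phrases, you can measure the share of end user messages that contain each word. This is a minimal sketch with naive tokenization; the helper name is hypothetical.

```python
from collections import Counter
from typing import Dict, List


def word_message_share(messages: List[str]) -> Dict[str, float]:
    """Percentage of end user messages that contain each word (counted once per message)."""
    counts: Counter = Counter()
    for message in messages:
        counts.update({w.strip("?.!,").lower() for w in message.split()} - {""})
    total = len(messages)
    return {word: round(100.0 * n / total, 1) for word, n in counts.most_common()}


messages = [
    "Show me my money",
    "How much money do I have?",
    "What is my current balance?",
    "Send money to Ana",
]
shares = word_message_share(messages)
print(shares["money"], shares["current"])  # 75.0 25.0
```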

Balance the Training Dataset Size

If all intents are similar in size, the chatbot assigns the same priority to each intent. As a result, it may assign a less important intent to an end user's message. To avoid this issue, make sure that the training datasets for important intents are larger than those for less important intents. Example: For a bank, the Mortgage intent is more important than the Welcome and Goodbye intents.

Branch based on Entity Types instead of Using Multiple Intents

In some cases, you can branch based on entity type instead of creating multiple intents.

Example: For the following intents, the end users' messages could be similar, with the only difference being the action - book, cancel, or reschedule.

| Intent | Training Phrase | Action |
|---|---|---|
| Book_Appointment | I want to book an appointment. Please book an appointment. | Book |
| Cancel_Appointment | I want to cancel my appointment. Please cancel my appointment. | Cancel |
| Reschedule_Appointment | I want to reschedule my appointment. I need to postpone my appointment. I want to move my appointment. | Reschedule |

Instead of using separate intents for each action, you can do the following:

- Merge the intents to create a single intent. Example: Appointment.

- Create custom data types for each action and add the values. Example:

  - entity_book = {book, booking}

  - entity_cancel = {cancel, canceled}

  - entity_reschedule = {move, reschedule, postpone, advance}

- Use named entity recognition (NER) and add these entity types to the intent.

- Branch the chatbot based on the retrieved entity type. Example: entity_book.
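The steps above can be illustrated outside Answers as one merged intent, a lookup from entity values to entity types, and branching on whichever type is found. This hypothetical Python sketch shows the logic only. It does not represent how Answers stores or evaluates data types, NER attributes, or branches, and the branch names and fallback are made up.

```python
import string
from typing import Dict, Optional, Set

# Custom data types from the example above: entity type -> values.
ENTITY_TYPES: Dict[str, Set[str]] = {
    "entity_book": {"book", "booking"},
    "entity_cancel": {"cancel", "canceled"},
    "entity_reschedule": {"move", "reschedule", "postpone", "advance"},
}


def detect_entity_type(message: str) -> Optional[str]:
    """Return the first entity type whose values appear in the message (first match wins)."""
    words = {w.strip(string.punctuation).lower() for w in message.split()}
    for entity_type, values in ENTITY_TYPES.items():
        if words & values:
            return entity_type
    return None


def route_appointment(message: str) -> str:
    """Branch the merged Appointment intent on the retrieved entity type."""
    branches = {  # hypothetical dialog names
        "entity_book": "Booking dialog",
        "entity_cancel": "Cancellation dialog",
        "entity_reschedule": "Rescheduling dialog",
    }
    # Hypothetical fallback when no action word is found.
    return branches.get(detect_entity_type(message), "Ask which action the user wants")


print(route_appointment("I need to postpone my appointment."))  # Rescheduling dialog
```

Adding a new action then means adding one data type and one branch, rather than a new intent with its own near-duplicate training phrases.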

Detect Conflicting Intents

Use Training Data Insights to identify conflicting intents.

You can then try the following options to resolve the issue:

- Merge intents.

- Branch based on entities. Refer to the examples in the Avoid Similar Intents and Branch based on Entity Types instead of Using Multiple Intents sections.